Navigating the AI Frontier: Illinois’s Bold Stance and the Future of Mental Healthcare

The world of artificial intelligence is evolving at a dizzying pace, promising to revolutionize nearly every aspect of our lives. From streamlining complex tasks to offering novel solutions for age-old problems, AI’s potential seems limitless. However, as this powerful technology becomes more integrated into our daily routines, critical questions arise about its application, particularly in sensitive areas like mental healthcare. Illinois has recently stepped into this complex arena with a decisive move, banning AI from providing direct therapy services. This significant legislative action not only reflects growing concerns about patient safety and efficacy but also signals a broader trend of states scrutinizing AI chatbots and their role in consumer well-being. Let’s dive into what this means for the future of mental health support and the broader landscape of AI regulation.

Illinois Draws a Line: AI Banned from Direct Mental Healthcare

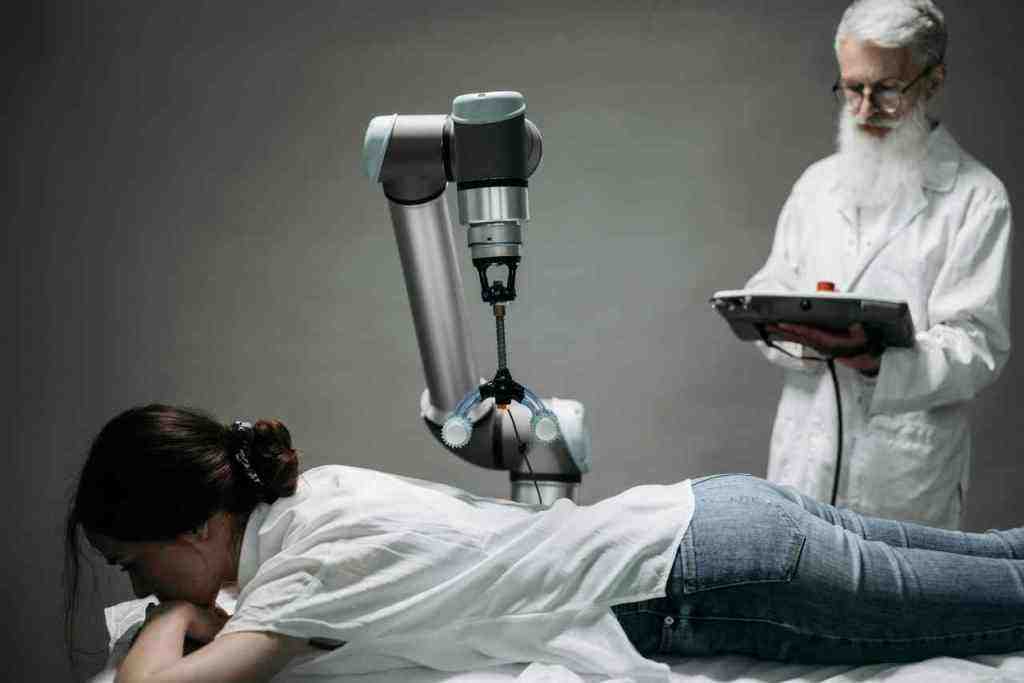

In a landmark decision, Illinois has officially prohibited the use of artificial intelligence in providing direct mental health therapy. The legislation, known as the Wellness and Oversight for Psychological Resources Act (HB1806), makes it unlawful for licensed therapists to use AI to make treatment decisions or to communicate directly with clients. Furthermore, companies are barred from offering AI-powered therapy or marketing chatbots as therapy tools without the involvement of a licensed professional. AI can still be employed for administrative tasks, such as scheduling or note-taking, but its role in clinical decision-making and direct patient interaction has been strictly curtailed.

The Rationale: Prioritizing Patient Safety and Professional Oversight

The rationale behind Illinois’s ban is rooted in a deep-seated concern for patient safety and the irreplaceable value of human connection in mental healthcare. Policymakers and mental health advocates have raised alarms about the potential dangers of AI-driven therapy, particularly when these systems haven’t undergone rigorous review for safety and effectiveness. The lack of comprehensive understanding and regulation surrounding AI’s capabilities in such a sensitive field is a primary driver for this cautious approach. Key concerns include patient privacy, data security, and the potential for AI to offer inappropriate or even harmful advice. As Illinois Department of Financial and Professional Regulation Secretary Mario Treto Jr. stated, “The people of Illinois deserve quality healthcare from real, qualified professionals and not computer programs that pull information from all corners of the internet to generate responses that harm patients.”

Implications for the Mental Health Sector

This ban in Illinois could very well catalyze a broader reevaluation of how technology is integrated into mental healthcare nationwide. It prompts a demand for stricter guidelines and more robust oversight for all AI applications within the sector. While AI offers potential benefits, such as increasing accessibility and providing early intervention tools, the current regulatory landscape highlights the need for a balanced approach that prioritizes human oversight and ethical considerations. Many experts believe the future lies in a collaborative model, where AI tools augment, rather than replace, human therapists, thereby enhancing the overall quality of care.

A Growing Trend: States Scrutinize AI Chatbots

Illinois’s legislative action is not an isolated incident; it’s part of a burgeoning trend of states examining and regulating the use of AI chatbots, especially those offering advice or services that could impact public well-being. The primary impetus behind this scrutiny is consumer protection. As AI chatbots become more sophisticated and widely adopted, there’s a growing imperative to ensure they are transparent, reliable, and do not mislead users.

Key Concerns: Accuracy, Bias, and Lack of Human Oversight

Several specific concerns are driving this state-level attention. The accuracy of information provided by chatbots is a significant worry, as is their potential to perpetuate biases. Furthermore, the absence of human oversight when dealing with complex or sensitive issues is a critical gap. For instance, a high-profile case involved a chatbot that allegedly suggested drug use to a fictional recovering addict, underscoring the potential for harm without proper safeguards. States like Nevada and Utah have also implemented restrictions on AI companies offering therapy services, with Utah tightening regulations for AI use in mental health but stopping short of a complete ban. New York and Massachusetts are also considering bills that would require disclosures from chatbots, and California has already enacted laws requiring bots to clearly disclose their non-human nature.

The Push for Transparency and Consumer Protection

The overarching goal of these regulatory efforts is to enhance consumer protection. This includes ensuring that users are aware when they are interacting with an AI and that the information or services provided are accurate and unbiased. As The Washington Post has reported, the debate around AI regulation is complex, with policymakers striving to balance innovation with necessary safeguards. The lack of clear federal guidelines means that states are increasingly taking the lead in establishing these crucial boundaries.

The Evolving Landscape of Artificial Intelligence: Opportunities and Challenges

The field of artificial intelligence is experiencing unprecedented growth, with AI systems becoming increasingly adept at tasks once thought to be exclusively human domains. This rapid advancement presents both immense opportunities and significant challenges across various sectors.

AI’s Expanding Role Across Industries

From healthcare and finance to customer service and creative arts, AI is rapidly integrating into numerous aspects of daily life. In the workforce, AI is automating repetitive tasks, potentially leading to job displacement in areas like data entry and customer service. A study by the Institute for Public Policy Research indicated that up to 60% of administrative tasks are automatable. Conversely, AI is also creating new job opportunities in fields like data analytics and AI development, with estimates suggesting a net gain in jobs globally. However, the impact on the workforce is not uniform, and adapting to these changes will require new skill sets and a commitment to lifelong learning.

The Imperative for Ethical AI Development

This rapid expansion underscores the critical need for ethical considerations in AI development. Ensuring that AI systems are developed and deployed responsibly is a growing priority for governments, organizations, and the public alike. Key ethical considerations include fairness, transparency, accountability, and the prevention of bias. As AI systems become more autonomous, the ethical implications of their decision-making processes become increasingly critical, especially in areas with significant human impact.

The Thorny Path of AI Regulation: Keeping Pace with Innovation

Regulating AI is a complex undertaking, primarily due to the rapid pace at which the technology evolves, often outstripping the ability of regulatory bodies to establish effective oversight. This “pacing problem” is a significant hurdle, making it challenging to create regulations that are both comprehensive and adaptable.

Defining the Scope and Ensuring Accountability

Determining what constitutes a regulated service when provided by AI can be intricate, as the lines between information provision, advice, and professional service often blur. Furthermore, establishing clear lines of accountability when AI systems make errors or cause harm presents another major challenge. Who is responsible when an AI provides faulty advice, or when an autonomous system causes an accident? These questions are at the forefront of the AI regulation debate.

The Balancing Act: Innovation vs. Oversight

Regulators face the delicate task of balancing the need for innovation with the imperative for oversight. Overregulation could stifle the development of beneficial AI technologies, while underregulation could lead to significant societal risks. The Brookings Institution highlights that effective AI oversight must be risk-based and targeted, focusing on mitigating the effects of the technology rather than micromanaging the technology itself.

Public Perception and Trust: The Foundation of AI Integration

Public awareness of AI capabilities is growing, leading to a mix of excitement about its potential and apprehension about its risks. For AI to be successfully integrated into society, building and maintaining public trust is paramount.

Building Trust Through Transparency and Reliability

Transparency and demonstrable reliability are key to fostering user trust in AI systems. Studies have shown that while the public acknowledges the convenience and benefits of AI in healthcare, concerns about personal privacy, data security, and regulation remain significant. Many people are uncomfortable with healthcare providers relying heavily on AI for their own care and express a preference for human oversight in medical decision-making.

The Media’s Role in Shaping Perceptions

Media coverage plays a crucial role in shaping public perception of AI, highlighting both its groundbreaking achievements and its potential pitfalls. Publications like The Washington Post are actively reporting on emerging AI trends and providing in-depth analysis of the complex policy debates surrounding AI, including the challenges of regulation and ethical considerations.

The Future of AI in Mental Health: Collaboration and Safeguards

Despite the current regulatory actions, AI holds significant potential benefits for mental health, including increased accessibility to support, early intervention tools, and personalized coping strategies. However, the path forward requires careful consideration of ethical guidelines and robust safeguards.

Human-AI Collaboration: The Way Forward

Many experts believe the future of AI in mental health lies in a collaborative approach, where AI tools augment, rather than replace, human therapists. This human-AI collaboration can enhance the overall quality of care by leveraging AI’s capabilities for tasks like data analysis and administrative support, freeing up human professionals to focus on empathy, nuanced understanding, and complex therapeutic interventions.

Developing Essential Safeguards for AI Mental Health Tools

Moving forward, the focus will likely be on developing robust safeguards, clear ethical guidelines, and comprehensive regulatory frameworks to ensure that AI tools used in mental health are safe, effective, and beneficial for users. This includes addressing concerns about algorithmic bias, data privacy, and the need for continuous human oversight.

Cross-State Collaboration and the Need for Harmonized Policies

As more states address AI regulation, there is a growing opportunity for cross-state collaboration and the sharing of best practices to create more harmonized policies nationwide. Given the borderless nature of AI services, cooperation among states is vital to ensure a consistent and effective regulatory approach that protects all citizens. While state-level actions are crucial, there is also a growing discussion about the potential need for federal oversight to establish comprehensive guidelines for AI across the country.

Conclusion: Charting a Responsible Course for AI in Society

Illinois’s ban on AI therapists is a significant marker in the ongoing conversation about AI’s role in our lives. It highlights the critical need for careful consideration, robust regulation, and a commitment to ethical development, especially in sensitive sectors like mental healthcare. As AI continues to evolve, the challenges of regulation, public trust, and ethical implementation will remain at the forefront. The future likely holds a path of human-AI collaboration, where technology serves as a powerful tool to augment human capabilities, rather than replace them entirely. By learning from pioneering state initiatives, fostering cross-state cooperation, and prioritizing transparency and accountability, we can work towards harnessing the transformative potential of AI responsibly, ensuring it benefits society while mitigating its inherent risks.

What are your thoughts on the use of AI in mental healthcare? Share your insights in the comments below!