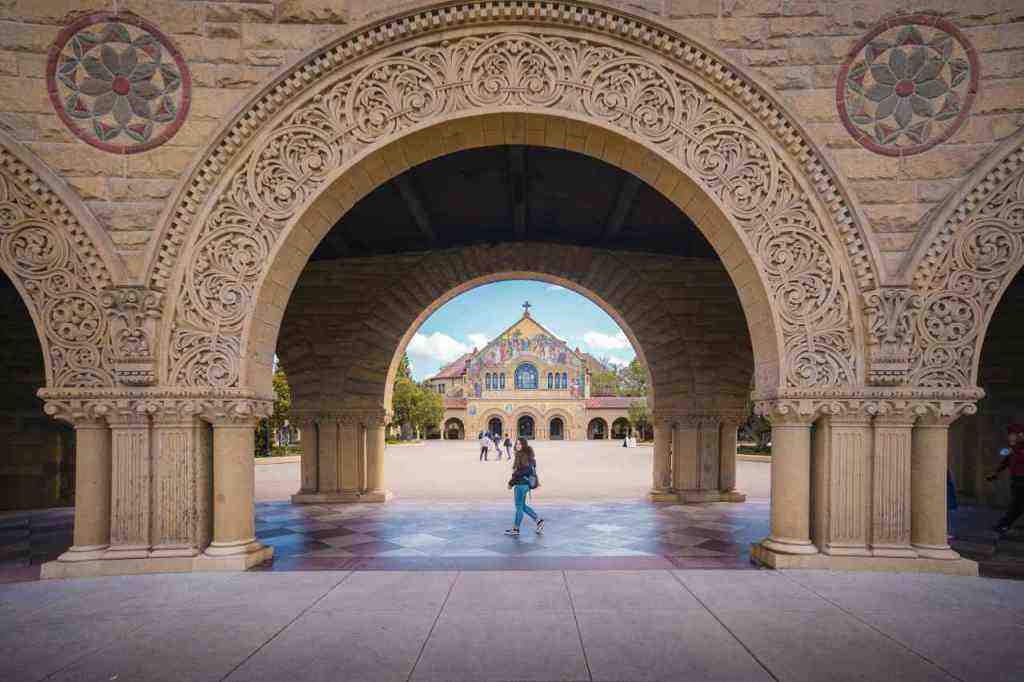

The Hidden Cost of Over-Cautiousness: Why Your AI Might Be Moving Too Slow

As of August 29, 2025, the world of Artificial Intelligence is buzzing with advancements, yet a subtle but significant problem is impacting the performance of Large Language Models (LLMs). You might have noticed it yourself: perhaps a chatbot that takes a little too long to respond, or a complex query that seems to get bogged down. This isn’t just a minor inconvenience; it’s a performance bottleneck that could be costing us valuable time and resources. The culprit? A phenomenon researchers are calling “computational pessimism,” and a team at Stanford University has been digging deep to understand why it’s happening and, more importantly, how to fix it.

The Slowdown: When AI Gets Too Careful

Imagine asking a super-smart assistant for directions. Instead of giving you the most direct route, they meticulously consider every possible detour, traffic jam, and road closure, even if they’re highly unlikely. This is akin to what’s happening with some of our most advanced LLMs. These models, designed to be incredibly accurate, can sometimes become *too* cautious. In their quest to avoid any hint of error or nonsensical output, they engage in a level of exhaustive analysis that dramatically slows down their processing. This “pessimism” isn’t about the AI feeling down; it’s a computational tendency to explore a vast array of possibilities, even when a more straightforward answer is readily available.

The implications are far-reaching. In customer service, a sluggish chatbot can lead to frustrated users. In scientific research, delayed analysis of massive datasets could slow down critical discoveries. Even in everyday tools like search engines or content generators, this inefficiency can add up, impacting user experience and productivity.

Unpacking the “Pessimism” in LLM Operations

So, what exactly is this “computational pessimism,” and how does it manifest in LLMs?
Think of it as an overemphasis on error avoidance. Instead of making a confident prediction based on the most probable outcome, the LLM engages in a more thorough, step-by-step verification process. It’s like a seasoned expert double-checking every single detail: accuracy stays high, but reaching a conclusion takes significantly longer.

When an LLM receives a prompt, it doesn’t just pull a single answer from its vast knowledge base. Instead, it generates a probability distribution over possible outputs and then selects the most likely one. In a “pessimistic” model, this selection process is far more rigorous. It involves extensive internal checks and balances, meaning the model might spend more time evaluating potential outputs than it does on the initial understanding of your request.

This leads to a fundamental trade-off: the certainty of an output versus the speed at which it’s produced. LLMs are often designed with a strong bias toward accuracy, which, as it turns out, can inadvertently lead to slower performance. The research emerging from Stanford suggests that current LLM architectures might be leaning too heavily on accuracy, to the detriment of overall efficiency.

Stanford Researchers Quantify the Slowdown

A dedicated team of researchers at Stanford University has been instrumental in not only identifying but also quantifying the impact of this computational pessimism. Their work, which has gained significant attention in the AI community as of August 2025, delves into the core mechanisms behind this performance bottleneck.

The Stanford study likely employed sophisticated analytical techniques, possibly involving controlled experiments that varied the complexity and ambiguity of input prompts to observe the resulting processing times. Comparing the performance of LLMs with different internal configurations would then make it possible to pinpoint the specific parameters contributing to the slowdown.
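
To make the selection step described earlier in this section more concrete, here is a minimal sketch of how a set of raw scores becomes a probability distribution, how a “confident” pick is made from it, and how entropy can serve as a rough measure of how ambiguous the choice is. The scores and the function names (`softmax`, `greedy_pick`, `entropy`) are illustrative assumptions, not anything taken from the Stanford work.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - highest) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_pick(probs):
    """Return the index of the most probable option."""
    return max(range(len(probs)), key=probs.__getitem__)


def entropy(probs):
    """Shannon entropy in nats: higher means a more ambiguous choice."""
    return -sum(p * math.log(p) for p in probs if p > 0)


# Hypothetical scores for three candidate outputs.
confident = softmax([5.0, 1.0, 0.5])  # one option clearly dominates
ambiguous = softmax([1.0, 1.0, 1.0])  # all options equally likely

print(greedy_pick(confident), round(entropy(confident), 3))
print(greedy_pick(ambiguous), round(entropy(ambiguous), 3))
```

When one option dominates, the entropy is low and a quick pick is reasonable; when the options are evenly matched, the entropy is high and extra checking is easier to justify. That is the intuition behind the accuracy-versus-speed trade-off, not a description of how any particular model decides.
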
Their research has identified key bottlenecks within the LLM’s internal workings. These could include:

* **Attention Mechanisms:** How the model focuses on different parts of the input.
* **Sampling Strategies:** The methods used to select the most probable output from a range of possibilities.
* **Computational Steps:** The sheer number of calculations required to resolve uncertainty.

The most striking finding from their empirical evidence is that LLMs can be up to **five times slower** than they need to be because of this over-cautious approach. This significant performance gap underscores the substantial room for improvement in current LLM designs and operational frameworks.

The “Optimistic” Alternative: A Path to Faster LLMs

The good news is that the Stanford researchers aren’t just identifying problems; they’re proposing solutions. They advocate for an “optimistic” approach, which doesn’t mean a reckless disregard for accuracy, but rather a more efficient way of navigating uncertainty. An optimistic LLM would be designed to make more confident predictions earlier in the processing pipeline, relying on a more streamlined evaluation of possibilities.

This involves rethinking the sampling and search strategies employed by LLMs. Instead of exhaustively exploring every potential path, an optimistic model might use more selective techniques, such as beam search with narrower beam widths or more aggressive pruning of unlikely options.

The key to achieving faster LLMs lies in finding the right balance between confidence in a chosen path and the need for exploration. The Stanford team’s work suggests that current models lean too heavily on exploration, even when a highly probable answer is readily available. The new approach aims to empower the model to make more decisive choices, leading to a significant boost in speed.
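
The effect of a narrower beam or more aggressive pruning can be illustrated with a small sketch. Everything below is a toy under stated assumptions: `TOY_MODEL` is a made-up lookup table standing in for a real model, `beam_search` is a simplified textbook version, and `min_prob` is a hypothetical pruning threshold. None of it is taken from the Stanford study.

```python
import math

# Hypothetical toy "model": next-token probabilities depend only on the last token.
TOY_MODEL = {
    "<s>": {"the": 0.6, "a": 0.3, "an": 0.1},
    "the": {"cat": 0.7, "dog": 0.2, "end": 0.1},
    "a": {"cat": 0.4, "dog": 0.5, "end": 0.1},
    "an": {"cat": 0.1, "dog": 0.1, "end": 0.8},
    "cat": {"end": 1.0},
    "dog": {"end": 1.0},
}


def beam_search(model, beam_width, max_len=3, min_prob=0.0):
    """Return (best token sequence, number of model lookups performed)."""
    beams = [(0.0, ["<s>"])]  # each beam is (log-probability, tokens so far)
    model_calls = 0
    for _ in range(max_len):
        candidates = []
        for score, tokens in beams:
            if tokens[-1] == "end":
                candidates.append((score, tokens))  # finished, carry forward
                continue
            model_calls += 1  # one lookup per unfinished beam
            for token, prob in model[tokens[-1]].items():
                if prob < min_prob:
                    continue  # prune unlikely continuations early
                candidates.append((score + math.log(prob), tokens + [token]))
        if not candidates:
            break  # everything was pruned; keep the previous beams
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = candidates[:beam_width]  # keep only the top beam_width paths
    return beams[0][1], model_calls


wide_seq, wide_calls = beam_search(TOY_MODEL, beam_width=3)
narrow_seq, narrow_calls = beam_search(TOY_MODEL, beam_width=1)
print(wide_seq, wide_calls)
print(narrow_seq, narrow_calls)
```

In this toy setup both settings arrive at the same sequence, but the narrow beam needs fewer model lookups. That will not hold in general: a narrower beam can miss a better sequence that only looks promising later, which is exactly the confidence-versus-exploration balance discussed above.
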

Implementing the Stanford Solution: From Theory to Practice

Translating these research findings into practical applications involves several key steps:

* **Modifying LLM Architectures for Efficiency:** This could include adjustments to how probabilities are calculated, how the model handles attention, and the algorithms used for decoding or generating output sequences.
* **Algorithmic Adjustments for Faster Inference:** Beyond architectural changes, new methods for approximating complex calculations, employing more efficient attention mechanisms, or implementing dynamic sampling strategies that adapt to the level of uncertainty in the input can accelerate inference times.
* **The Role of Fine-tuning and Training Data:** Fine-tuning LLMs with specific datasets and objectives can also play a crucial role. Training data that encourages more decisive prediction-making, or fine-tuning processes that reward faster inference times, could help instill a more “optimistic” operational mode in the models.

The goal is to create LLMs that are not only accurate but also highly responsive, making them more practical and effective for a wider range of real-world applications.

The Ripple Effect: Implications of Faster LLMs

The widespread adoption of faster, more efficient LLMs promises to bring about transformative changes across various sectors:

* **Enhanced User Experience in Real-time Applications:** Imagine chatbots that feel truly conversational and responsive, virtual assistants that anticipate your needs, and interactive AI tools that are more engaging than ever before. Faster LLMs will make these experiences seamless and intuitive.
* **Accelerated Scientific Discovery and Research:** In fields like medicine, materials science, and climate research, faster LLMs could dramatically speed up the analysis of vast datasets, the simulation of complex systems, and the generation of novel hypotheses. This could lead to breakthroughs at an unprecedented pace.
* **Broader Accessibility and Deployment of AI:** Increased efficiency often translates to lower computational costs. This makes advanced AI technologies more accessible to a wider range of organizations, including smaller businesses, educational institutions, and individual researchers. This democratization of AI can foster innovation and drive economic growth.

As of 2025, the push for more efficient AI is a major trend, with companies and researchers alike focusing on optimizing LLM performance. This research from Stanford is a critical piece of that puzzle, offering a clear path toward unlocking the full potential of these powerful tools.

The Future of LLM Performance: Continuous Optimization

The work emerging from Stanford University represents a significant step forward, but the pursuit of optimal LLM performance is an ongoing journey. Continuous optimization of algorithms, exploration of novel architectures, and advancements in hardware will all contribute to the future evolution of LLMs.

As AI technology advances, our understanding of what constitutes “optimal” LLM behavior will also evolve. The focus may shift from simply maximizing accuracy to achieving a more nuanced balance between accuracy, speed, interpretability, and ethical considerations.

The close synergy between academic research, like that conducted at Stanford, and practical application development is vital. Insights from the lab need to be translated into real-world implementations to drive tangible improvements in AI performance and utility. This collaborative approach ensures that the innovations we see today pave the way for even more capable and efficient AI systems tomorrow.

Conclusion: Towards More Efficient and Responsive AI

In summary, the significant slowdown observed in many LLMs can be attributed to an inherent “pessimism” in their design, leading them to over-analyze and explore too many possibilities.
The groundbreaking work by Stanford researchers has not only quantified this issue but also provided a clear roadmap for achieving faster, more efficient AI through a more “optimistic” computational approach.

The findings from Stanford are poised to reshape the AI landscape. By offering a practical, evidence-based method for overcoming a critical performance bottleneck, this research empowers developers to build more responsive, capable, and accessible AI systems, ultimately benefiting users and industries worldwide.

This study serves as a crucial reminder of the importance of efficiency in AI design. It calls for a re-evaluation of how we balance accuracy with speed, encouraging a move towards more streamlined and intelligent processing methods that unlock the full potential of LLMs. As we continue to integrate AI into every facet of our lives, ensuring its efficiency and responsiveness will be key to harnessing its true power.