US Intelligence Embraces AI for Surveillance Boost in Twenty Twenty-Four

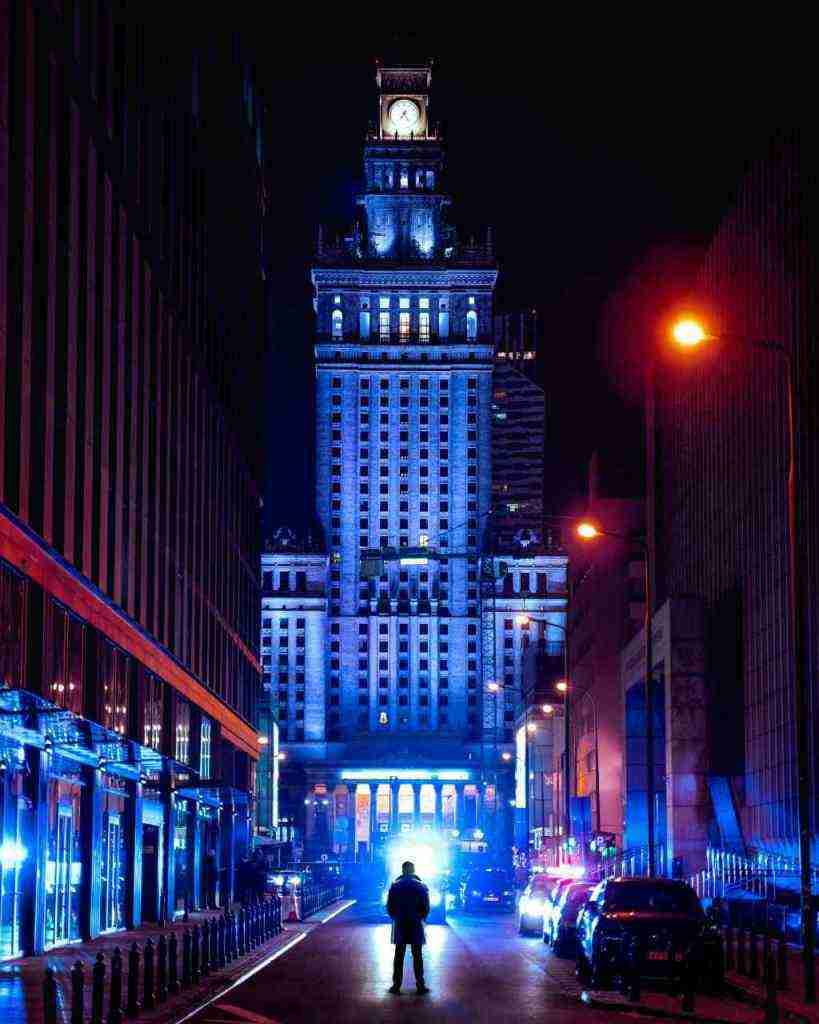

The year is upon us, folks. Twenty twenty-four. Flying cars? Not quite. But how about AI snooping on us from every street corner? Yeah, we’re getting closer to that dystopian reality. The US intelligence community, feeling the heat to keep up with the mountains of data from our increasingly surveilled lives, is turning to the big guns: AI and machine learning.

Uncle Sam Wants You (To Build Its AI Spy Network)

In a move straight outta Silicon Valley, US intelligence agencies have put out a call, a formal request for proposals (RFP, for you acronym lovers), to all the tech whizzes out there. They’re on the hunt for cutting-edge software, the kind that can turn all that raw surveillance footage into, well, actionable intel. Think Minority Report but, hopefully, less pre-crime and more like, “Hey, that dude’s been loitering for a while, maybe check it out?”

So, what exactly is on Uncle Sam’s AI shopping list? Let’s break it down:

- Automated Object Recognition: Imagine software that can not only spot a face in a crowd but also tell you who that face belongs to, all in real time. We’re talking CCTV cameras, traffic cameras, even those creepy drones everyone’s freaking out about. Oh, and let’s not forget the body cameras our boys in blue are rocking these days. All that footage? Prime AI real estate.

- Real-Time Tracking: So, you’ve identified a person of interest (let’s call him Bob). Now, you want to keep tabs on Bob. Not just on one camera feed, but across an entire city. Maybe even across state lines. That’s where real-time tracking comes in. AI that can follow Bob’s every move, switching seamlessly between cameras like some kinda high-tech surveillance ballet.

- Anomaly Detection: This is where things get really spooky. We’re talking about AI that can analyze patterns and flag unusual behavior, basically anything that deviates from the norm. Think of it like that friend who always knows when you’re lying, but on a much larger, creepier scale.

- Threat Assessment: Okay, so the AI has spotted some shady stuff. Now what? Is it just Bob forgetting where he parked his car, or is it something more sinister? That’s where threat assessment comes in. This AI is all about ranking risks, deciding which flagged activities need immediate attention and which ones can wait. You know, prioritize the potential bomb threats over the jaywalkers.
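To make the last two bullets a little less abstract, here’s a toy Python sketch of how anomaly detection and threat triage might fit together. It’s a minimal illustration under made-up assumptions, not anything from the actual RFP: the `Event` fields, the severity weights, and the three-standard-deviation cutoff are all hypothetical. It flags events whose dwell time sits far above a “normal” baseline (a simple z-score test), then ranks whatever got flagged so the unattended bag outranks the jaywalker.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class Event:
    camera_id: str        # hypothetical camera identifier
    label: str            # what a (hypothetical) detector thinks it saw
    dwell_seconds: float  # how long the subject stayed in frame


# Hypothetical severity weights; a real system would not use a hand-written table like this.
SEVERITY = {"unattended_bag": 3.0, "loitering": 1.0, "jaywalking": 0.1}


def flag_anomalies(events, baseline, z_threshold=3.0):
    """Anomaly detection: keep events whose dwell time is more than
    z_threshold standard deviations above the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # needs at least two baseline values
    if sigma == 0:
        return [e for e in events if e.dwell_seconds > mu]
    return [e for e in events if (e.dwell_seconds - mu) / sigma > z_threshold]


def triage(flagged):
    """Threat assessment: highest severity first, longest dwell breaks ties.
    Unknown labels get a middling default weight of 0.5."""
    return sorted(
        flagged,
        key=lambda e: (SEVERITY.get(e.label, 0.5), e.dwell_seconds),
        reverse=True,
    )


baseline = [30, 45, 40, 35, 50, 38, 42, 36]  # made-up "normal" dwell times, in seconds
events = [
    Event("cam-7", "loitering", 600),
    Event("cam-2", "unattended_bag", 300),
    Event("cam-4", "jaywalking", 70),
    Event("cam-1", "loitering", 44),  # Bob looking for his car: well within normal
]
ranked = triage(flag_anomalies(events, baseline))
print([e.label for e in ranked])  # ['unattended_bag', 'loitering', 'jaywalking']
```

Notice the jaywalker still gets flagged. The anomaly detector only knows “unusual”; it’s the triage step that decides unusual doesn’t necessarily mean urgent. Real systems would swap that lookup table for learned models, which is exactly where questions about bias start to matter.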

Why the Sudden AI Obsession?

You might be thinking, “Wait, hasn’t the government been using AI for surveillance for, like, forever?” And you wouldn’t be entirely wrong. But here’s the thing: the game has changed. We’re drowning in data, people. Surveillance cameras are everywhere, spitting out footage twenty-four-seven. Drones are buzzing around like digital mosquitoes. Our phones are practically tracking our every move (let’s be real, they totally are). It’s more than even the feds can handle.

Human analysts, bless their cotton socks, are straight-up overwhelmed. They’re sifting through hours of footage, trying to find that one needle in a haystack of boring daily life. It’s no wonder they’re missing stuff. And when lives are on the line, missing stuff is not an option.

Which brings us to the big kahuna: proactive security. Nobody wants to be caught with their pants down when the next big threat rears its ugly head. The powers that be are feeling the pressure to get ahead of the curve, to anticipate threats before they even happen. And how do they plan to do that? You guessed it: AI.

But Wait, Is Big Brother Watching a Little Too Closely?

Now, before we all run for the hills and start wearing tinfoil hats, let’s take a breath. AI may genuinely help keep us safe, but let’s not forget the flip side of this digital coin. We’re talking about ethical concerns, folks. The kind that keep privacy advocates up at night.

First on the list: good old-fashioned privacy violations. We’re talking about AI that can recognize your face in a crowd, track your movements, maybe even analyze your behavior to predict what you’ll do next. Sounds like something out of a Black Mirror episode, right? It’s a slippery slope, my friends, and we need to be careful not to slide headfirst into a surveillance state.

Then there’s the issue of bias in algorithms. AI is only as good as the data it’s fed. And let’s face it, the real world is full of biases, both conscious and unconscious. If we’re not careful, we could end up with AI that perpetuates and even amplifies those biases, leading to unfair targeting of certain individuals or groups. Imagine an AI trained on data that leads it to disproportionately flag people of color as suspicious. Yeah, not cool.
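So how would anyone even notice that kind of skew? One of the simplest checks is to compare how often a system flags people in each group. Here’s a minimal Python sketch of that idea; the function names, the group labels, and the numbers in the example are all invented for illustration, and a real audit would look at far more than this one metric.

```python
from collections import defaultdict


def flag_rates(records):
    """records: iterable of (group, was_flagged) pairs.
    Returns {group: fraction of that group's records that were flagged}."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, was_flagged in records:
        totals[group] += 1
        flagged[group] += int(was_flagged)
    return {g: flagged[g] / totals[g] for g in totals}


def rate_ratio(rates):
    """Lowest flag rate divided by highest.
    1.0 means every group is flagged equally often; lower means more skew."""
    highest = max(rates.values())
    lowest = min(rates.values())
    return lowest / highest if highest > 0 else 1.0


# Made-up example: 100 people per group, 5 flagged in group A, 15 in group B.
records = [("A", i < 5) for i in range(100)] + [("B", i < 15) for i in range(100)]
rates = flag_rates(records)
print(rates)                        # {'A': 0.05, 'B': 0.15}
print(round(rate_ratio(rates), 2))  # 0.33
```

A ratio well below 1.0 (here, group A gets flagged a third as often as group B) is a red flag worth digging into. Equal rates don’t prove a system is fair, though; they’re just one of the easier things to measure.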

And finally, the dreaded mission creep. Remember how we talked about AI being used for, say, identifying potential threats? Well, what’s to stop that same technology from being used for other purposes? Purposes that might not be so, shall we say, ethical? Think about it: AI that’s supposed to be tracking terrorists could easily be repurposed to monitor political dissidents, journalists, or anyone else the powers that be deem a nuisance. And that, my friends, is a recipe for disaster.

The Future of Surveillance: A Brave New World or a Digital Dystopia?

So, where do we go from here? The future of AI-powered surveillance is still being written, and the outcome of this RFP could help determine whether we end up with a society that values security over freedom or one that manages to strike a balance between the two.

This isn’t just some abstract debate for the tech elite, folks. This is about our lives, our freedoms, our fundamental rights. We need to be asking the tough questions, demanding transparency from our government, and pushing for safeguards that protect our privacy and civil liberties. We need to be having those uncomfortable conversations about the trade-offs between security and freedom, about the kind of world we want to live in. Because if we don’t, the algorithms will decide for us.

The genie’s out of the bottle, folks. AI is here to stay. But it’s up to us, the citizens of this digital age, to make sure it’s used for good, not for turning our world into some kind of Orwellian nightmare.

What Can You Do About It?

Alright, so you’re fired up about AI surveillance. You’re ready to storm the Capitol, tinfoil hat in hand. But hold your horses, brave citizen! There are more productive ways to make your voice heard.

- Get Informed: Knowledge is power, people! Read up on AI surveillance, the technologies involved, and the potential implications. The more you know, the better equipped you’ll be to spot the BS and call it out.

- Support Privacy Organizations: There are organizations out there fighting the good fight for digital privacy. Support them! Donate, volunteer, spread the word!

- Contact Your Representatives: Yeah, yeah, calling your congressman might seem about as effective as yelling at a brick wall. But hey, you never know. Let them know you care about this issue. Tell them you want to see strong privacy protections and oversight when it comes to AI surveillance.

- Start a Conversation: Talk to your friends, your family, that weird neighbor who always wears the same bathrobe. The more people who are aware of this issue, the better. Let’s get people talking, debating, and demanding better from our leaders.

The future of AI surveillance is in our hands. Let’s make sure we build a world where technology empowers us, not enslaves us.