Specifics of the Hardware Focus and Component Integration

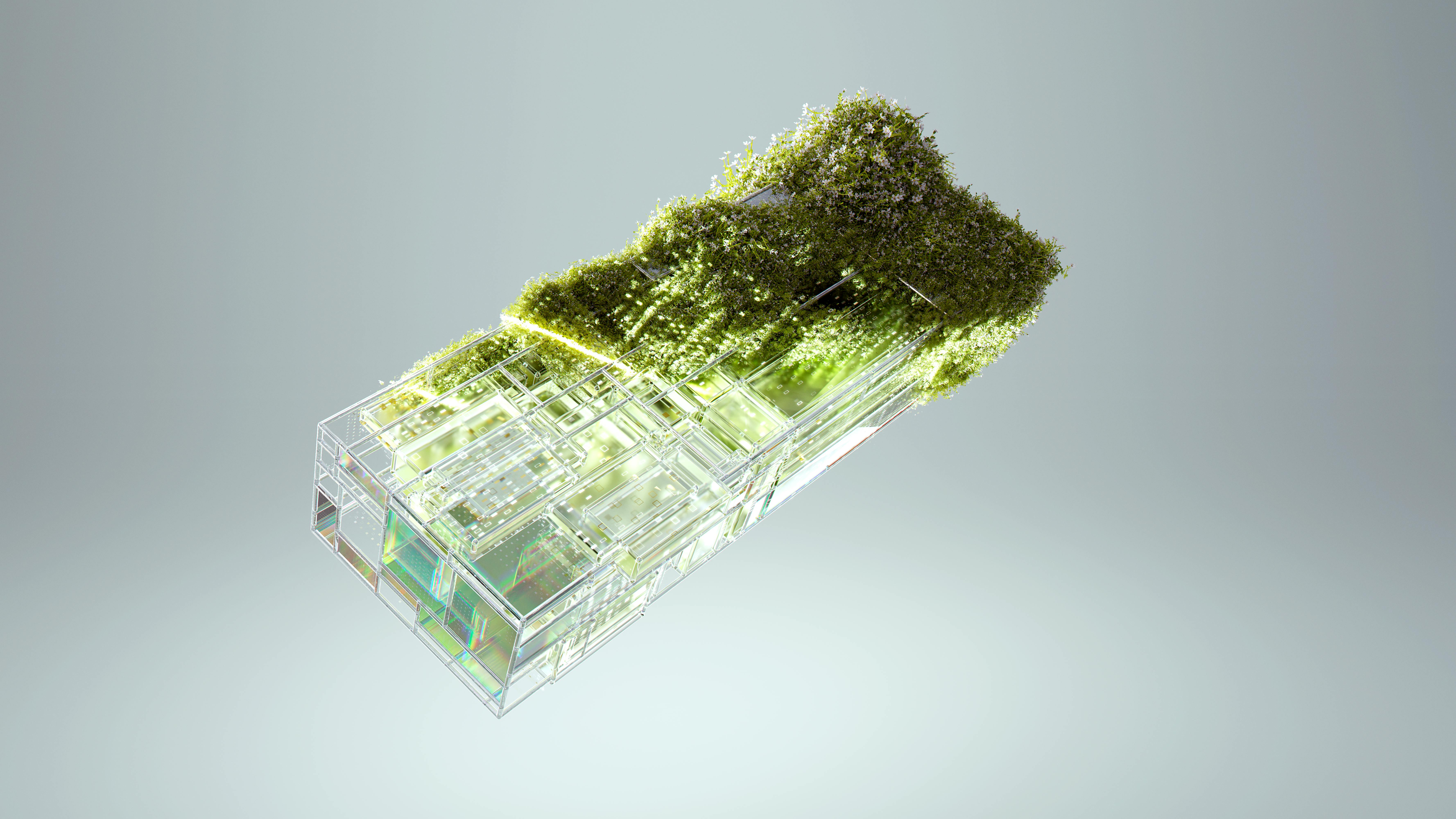

When we talk about “hardware,” we aren’t just talking about stacking boxes in a warehouse. We are talking about engineering systems where thermal dynamics and interconnectivity are the primary limits on performance. The Foxconn collaboration gets granular, specifying the manufacturing of the very components that make an AI cluster function at peak efficiency.

Critical Physical Infrastructure Elements Targeted for Production

The manufacturing scope detailed in the deal is comprehensive, targeting the entire apparatus required for a functioning, high-density AI cluster that can support the next generation of models. This mandate goes far beyond simply assembling standard server chassis; it’s about custom-engineering the environment for extreme compute density.

The production focus includes:

- Server Racks: The structural backbone, designed in parallel with OpenAI’s roadmap to handle the specialized weight and cooling requirements of next-gen GPUs.

- Advanced Cabling: The complex, high-bandwidth cabling required for ultra-high-speed, low-latency interconnectivity between thousands of processing units, essential for distributed model training.

- Networking Components: Specialized networking equipment, likely including custom-designed switches and interface cards, necessary for cluster-wide communication that minimizes data bottlenecks.

- Power Systems: Robust, customized power systems engineered for superior efficiency and density to feed these energy-hungry machines reliably.

- Liquid Cooling: Systems for the rapid migration toward direct-to-chip liquid cooling or full immersion, which dissipate heat far more effectively than traditional air cooling.

- Tailored Airflow: More sophisticated airflow management techniques tailored to densely packed server designs that are physically constrained by component proximity.

This detailed attention to every physical piece suggests a desire for total control over the quality and performance characteristics of the final deployed unit. It’s the difference between buying a stock car and commissioning a bespoke Formula 1 chassis: every millisecond and every watt matters when you are deploying infrastructure costing billions.

The Role of Advanced Cooling and Power Systems

As computational density spirals upward, with rack power requirements increasing year over year, thermal management is no longer a secondary concern. It’s the primary engineering challenge threatening to cap AI progress. The collaboration explicitly names work on cooling systems.

For next-generation AI hardware, this almost certainly means a pivot toward the liquid cooling (direct-to-chip or full immersion) and tailored airflow management listed among the production targets above, since liquid removes heat far more effectively than traditional air cooling.

The energy demands are equally stark. By late 2025, AI systems were estimated to consume energy rivaling that of medium-sized industrialized nations. The specialized power systems Foxconn is tasked with manufacturing will need to be engineered for maximum efficiency and power density. Why? Every watt lost in power conversion is paid for but never reaches the compute, and it becomes extra heat the cooling system must remove. Higher efficiency therefore lowers operating costs directly while maximizing the uptime and performance of the AI hardware housed within. This isn’t just good business; it’s an essential step toward making the infrastructure sustainably scalable.

Anticipated Economic and Technological Ramifications

When a single entity announces infrastructure commitments in the hundreds of billions, the effects ripple across the entire economic ecosystem. Such commitments ease fears of future compute scarcity, but they simultaneously fuel concerns about market exuberance.

Market Sentiment Shifts in Technology and Financial Sectors

The confirmation of this major domestic manufacturing commitment sent immediate ripples through both the technology stock market and the broader financial ecosystem in the days following the announcement.

The Upside Signal: For hardware-centric giants like Nvidia, whose Graphics Processing Units (GPUs) remain the lifeblood of these data centers, this news reinforces the strength of underlying demand. Guaranteed paths to infrastructure scale, even if they involve custom sourcing, point to a reliable, multi-year revenue stream for their highest-margin products. This typically fuels positive market sentiment, validating the entire semiconductor thesis for the foreseeable future.

The Downside Concern: Conversely, the sheer magnitude of these capital commitments across the sector, totaling well over a trillion dollars for OpenAI alone, has intensified concerns about potential AI bubble dynamics. Are valuations outpacing proven return on investment? Analysts are treating partnerships that promise to streamline deployment, like the Foxconn deal, as a bellwether for the sector’s operational efficiency. If this hardware can be deployed efficiently and profitably, the bubble narrative loses steam; if deployment lags, financial pressure on the ecosystem will mount.

Actionable Takeaway for Investors: Don’t just watch the AI developers’ valuations; monitor the operational efficiency metrics of their hardware partners. A smooth Foxconn rollout signals execution capability, which the market currently values more highly than a good algorithm alone.

Energy Demands and the Sustainability Conversation

The physical reality of building out infrastructure to support an AI economy of this magnitude inevitably brings the conversation back to the planet’s electrical grids and environmental impact. The requirement for massive, resilient power delivery, noted in the component focus above, underscores the strain AI build-outs place on existing utilities, with demands measured in tens of gigawatts.

It’s no longer enough to just train the model; one must power the model reliably and, increasingly, cleanly. A typical AI-focused data center can consume as much electricity as 100,000 average households. That puts immense pressure on local grids, and the cost of the necessary grid upgrades can show up as significant rate increases for residential and small-business customers.

This is where the partnership’s focus on highly efficient, domestically manufactured cooling and power systems becomes an environmental imperative as well as an economic and geopolitical one. If OpenAI can cut the energy wasted in cooling and power conversion through Foxconn’s custom manufacturing, even a few percentage points matter at this scale: a few percent of tens of gigawatts is hundreds of megawatts, comparable to the output of a power plant. The long-term success of this venture will be measured not only by the speed of model training but by its ability to operate this vast new fleet of compute with optimized energy use, aligning industrial growth with stringent sustainability expectations.

The Geopolitical Ripple: Reindustrialization and Supply Chain Control

While the technical specifications are compelling, the geopolitical subtext of this Foxconn partnership cannot be overstated. The language used by executives, “generational opportunity to reindustrialise America” and “strengthen US leadership,” is intentional and signals a strategic pivot with major policy implications.

Securing the “Last Mile” of Hardware Deployment

For years, the United States has dominated the design of the world’s most advanced chips and software. However, the “last mile” (the scalable, reliable, high-volume assembly of the complete, integrated server system) has largely been consolidated in Asia. That concentration created a single point of failure on the path to AI dominance.

The Foxconn collaboration aims to change that equation by working to:

- Improve Rack Architecture for US Manufacturing: Redesigning the physical container (the rack) to be easier, faster, and more cost-effective to build using domestic U.S. labor and materials.

- Broaden Sourcing: Working to include more domestic chipsets and suppliers across the bill of materials, moving beyond a handful of global leaders for every single part.

- Expand Localized Assembly: Ensuring the final, critical integration steps happen stateside, protecting intellectual property and ensuring immediate quality control over high-value systems.

This is about supply chain resilience wrapped in economic opportunity. It’s a strategic move to ensure that the physical apparatus powering the most important technology of the decade is not vulnerable to long-distance shipping delays or international trade friction.

The Interplay with Chipmaker Investments

It’s also vital to understand how this domestic focus interacts with the chip deals. OpenAI’s partnership with Broadcom to co-develop accelerators is an upstream play, securing the most advanced processing technology. The Foxconn deal is the midstream play: the integration. It ensures that custom chips co-designed with Broadcom, or chips sourced from Nvidia or AMD, can be integrated immediately into the Foxconn-designed, US-manufactured rack architecture, ready to ship to the Stargate sites.

This synergy means faster time to deployment. Traditional hardware cycles are slow; the AI model cycle is frantic. By aligning the software need (OpenAI’s insight) with the manufacturing capability (Foxconn’s engineering) through parallel design, the companies could bring new systems online months, perhaps years, faster than traditional procurement models allow. This speed-to-market advantage is perhaps the most potent weapon in the current AI race.

Actionable Insights for the Broader Tech Ecosystem

If you are a technology leader, a founder, or an engineer working in adjacent fields, what does this massive infrastructure deployment signal for your strategy?

Practical Steps to Prepare for the Next Compute Wave:

- Diversify Your Compute Strategy: Do not become overly reliant on a single hyperscaler or a single chip architecture. Start evaluating alternative cloud providers (like the neoclouds being supported by Stargate partners) and exploring non-GPU acceleration options now.

- Focus on Data Efficiency: Since power and cooling are the physical bottlenecks, the highest ROI will come from optimizing *how* you use compute. Can your inference load be compressed? Can your model training be made more data-efficient? Efficiency in your code can be worth more than a few extra racks of hardware.

- Map Your Component Dependency: Just as OpenAI is mapping the rack, you should map your application’s dependency on specific hardware components (e.g., high-bandwidth memory, specific interconnect standards). Where is the single point of failure in your *software* stack?

- Embrace Hardware-Aware Software Development: Engineers need to stop treating hardware as an infinite resource. Developing with the constraints of high-density, high-power systems in mind, including latency, memory access patterns, and thermal throttling, is no longer optional.

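Mapping your application’s dependency on specific hardware components can start as a simple inventory. The Python sketch below is illustrative only. The workload names, capability labels, and providers are hypothetical placeholders. Its rule, which flags any capability with one or zero known suppliers, is an assumed definition of a single point of failure, not a standard method.

```python
from collections import defaultdict

# HYPOTHETICAL inventory: each workload lists the hardware capabilities it
# assumes. Names are illustrative placeholders, not real product SKUs.
WORKLOADS = {
    "training-job": {"hbm", "vendor-specific-interconnect", "gpu-accelerator"},
    "batch-inference": {"hbm", "gpu-accelerator"},
    "embedding-service": {"cpu-vector-extensions"},
}

# HYPOTHETICAL supply map: which providers (clouds, vendors) can currently
# supply each capability to you.
SUPPLIERS = {
    "hbm": {"provider-a", "provider-b"},
    "vendor-specific-interconnect": {"provider-a"},
    "gpu-accelerator": {"provider-a", "provider-b"},
    "cpu-vector-extensions": {"provider-a", "provider-b", "provider-c"},
}


def single_points_of_failure(workloads, suppliers):
    """Map each capability with at most one known supplier to the workloads needing it.

    The "<= 1 supplier" threshold is an assumed definition of a single
    point of failure; adjust it to your own risk tolerance.
    """
    exposed = defaultdict(list)
    # Sorting keeps the report deterministic (workloads listed alphabetically).
    for workload, needs in sorted(workloads.items()):
        for capability in sorted(needs):
            # Capabilities missing from the supply map count as zero suppliers.
            if len(suppliers.get(capability, set())) <= 1:
                exposed[capability].append(workload)
    return dict(exposed)


if __name__ == "__main__":
    report = single_points_of_failure(WORKLOADS, SUPPLIERS)
    for capability, affected in report.items():
        print(f"{capability}: single source, needed by {', '.join(affected)}")
```

With these placeholder inputs, the sketch flags only the hypothetical vendor-specific interconnect, because a single provider supplies it and the training job depends on it. In practice, the two maps would come from your own deployment manifests and procurement records.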
Conclusion: The Era of Physical AI

The news cycle moves fast, but the infrastructure buildout OpenAI is undertaking, backed by billions in capital and now partnered with manufacturing giants like Foxconn, is moving slowly and deliberately. As of today, November 23, 2025, the message is clear: The AI revolution has hit the physical plane. It’s about gigawatts, gigabytes, and gigatons of hardware assembly.

The ambition is staggering: $1.4 trillion aimed at 30 GW of compute, underpinned by partnerships with Oracle, SoftBank, AMD, and Broadcom, and now complemented by a domestic hardware manufacturing alliance with Foxconn. This is not just a race for smarter algorithms; it is a race to build, power, and control the world’s new foundational layer of commerce and intelligence. The success or failure of this massive physical deployment will shape not just the future of OpenAI but the pace at which the entire AI economy evolves over the next decade.

What part of this physical AI pivot concerns you the most—the energy drain, the geopolitical shift, or the sheer scale of the private capital involved? Let us know in the comments below. Your perspective on this massive infrastructure shift is what drives the conversation forward.