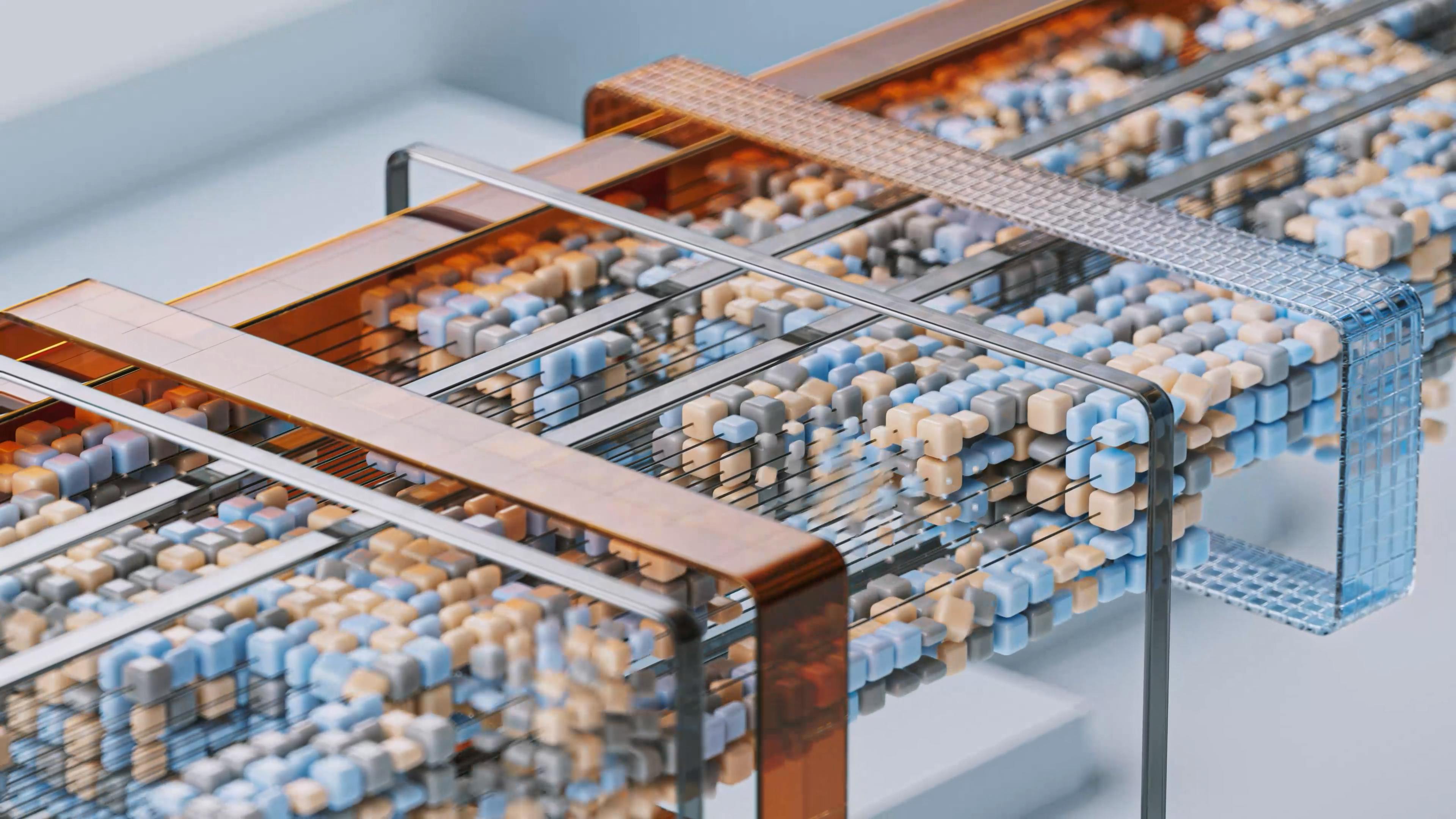

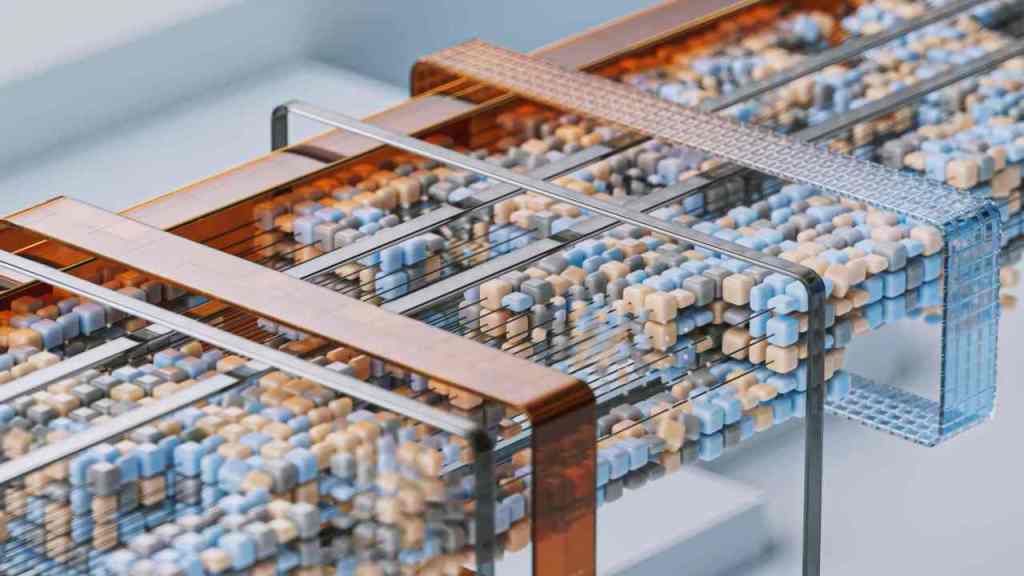

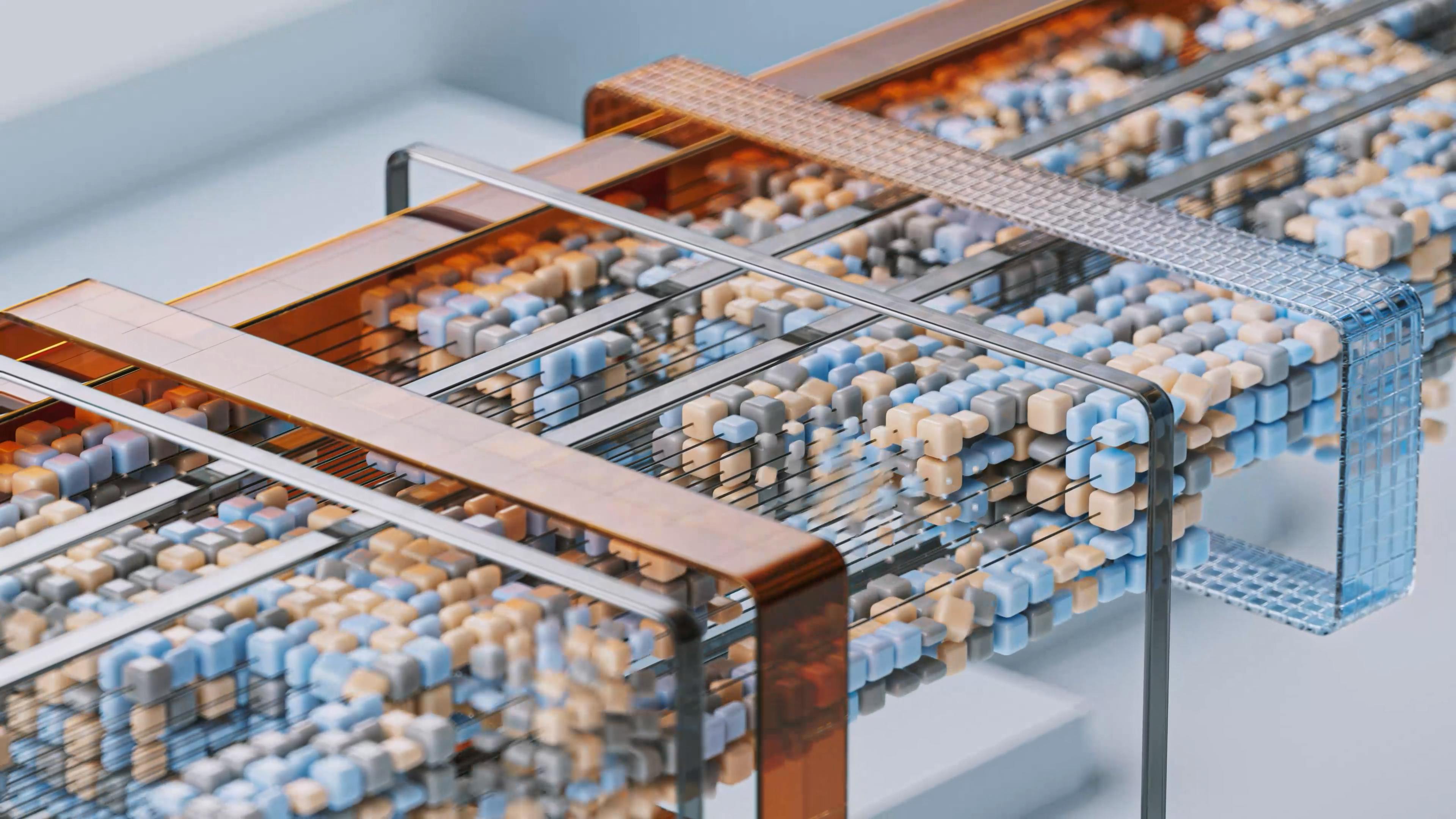

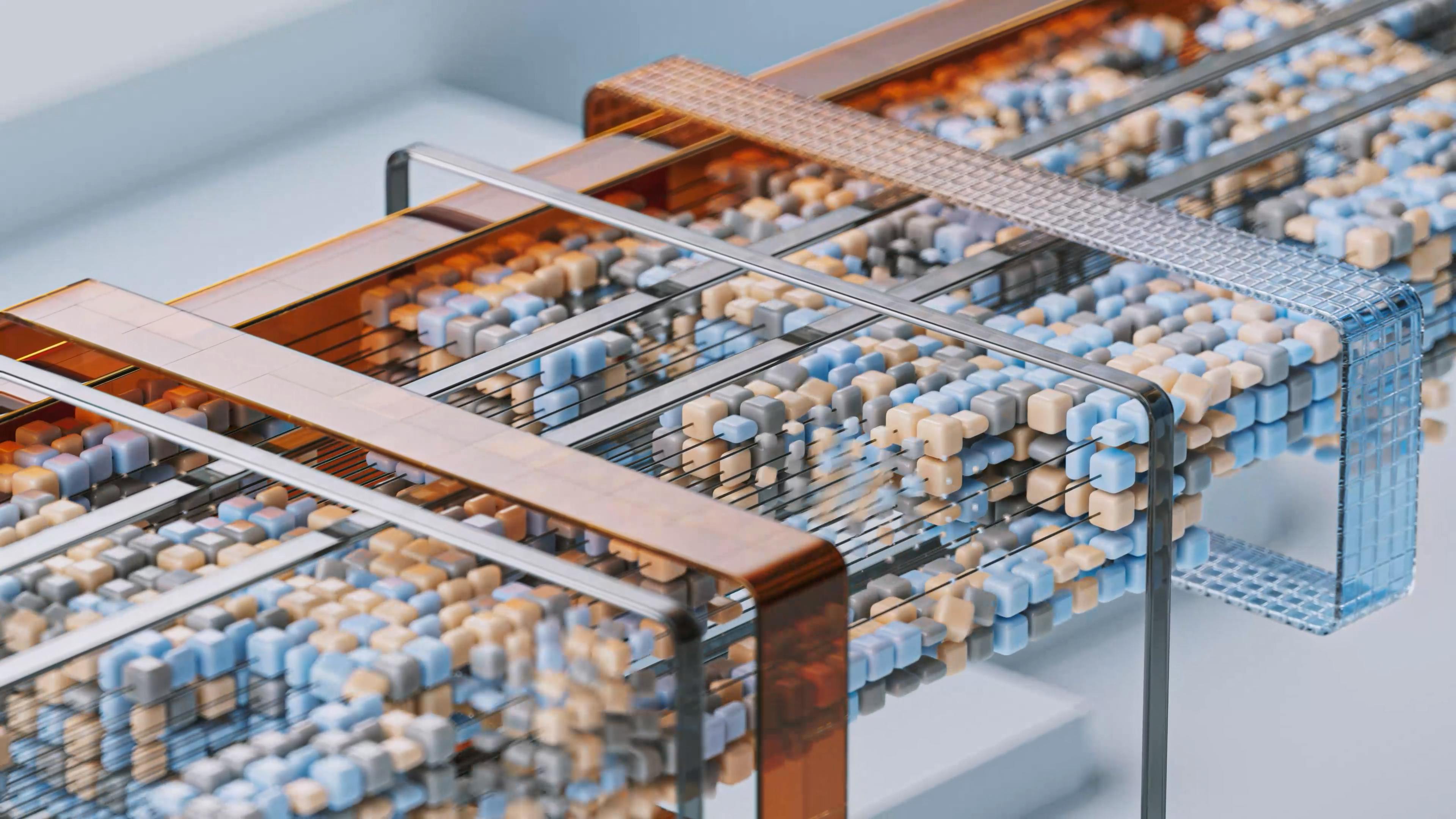

**The Engine Room of Tomorrow: How Advanced Hardware Fuels the AI Revolution**

As artificial intelligence continues its breathtaking expansion, weaving itself into the fabric of our daily lives and industries, it’s easy to marvel at the intelligence itself. We interact with sophisticated language models, admire AI-generated art, and benefit from AI-driven efficiencies. But behind every intelligent query, every predictive model, and every groundbreaking discovery lies a physical foundation: advanced hardware. This isn’t just about faster computers; it’s about a fundamental reshaping of computational power, driven by unprecedented user adoption and the relentless pursuit of artificial general intelligence. As of September 26, 2025, the landscape of AI hardware is dynamic, characterized by massive investments, groundbreaking innovations, and critical challenges.

**Technological Backbone: The Role of Advanced Hardware**

The sheer scale and complexity of modern AI models, especially large language models (LLMs), necessitate a revolution in computing hardware. Traditional processors simply cannot keep pace with the parallel processing and massive data throughput required for training and running these sophisticated systems. This has led to an arms race in silicon design and data center architecture, built to handle the immense computational demands of AI.

**The Critical Importance of Next-Generation Processors**

At the very core of any advanced AI computing system are its processors. Today’s AI development is inextricably linked to the capabilities of specialized hardware, particularly processors engineered for parallel processing and high-speed data throughput. These next-generation chips are not merely enhancements; they are fundamental prerequisites for unlocking the next level of AI performance. They are meticulously designed to handle the massive parallel computations essential for training neural networks and executing inference tasks with remarkable speed and efficiency.
Without access to these powerful computational engines, the ambitious AI models envisioned for the future would remain confined to theoretical discussions, unable to be brought to practical fruition. The evolution from general-purpose CPUs to specialized AI accelerators signifies a paradigm shift, where the hardware is tailored precisely to the unique demands of machine learning workloads. This engineering focus ensures that the foundational elements of AI (perception, reasoning, and generation) can be achieved at a scale and speed previously unimaginable.

**Millions of GPUs Fueling Innovation**

The hardware overwhelmingly driving this AI revolution is the Graphics Processing Unit (GPU). While originally conceived for rendering complex graphics in video games and visual applications, GPUs have proven to be exceptionally adept at performing the parallel computations that form the bedrock of deep learning. As AI models grow exponentially in size and complexity, the demand for GPUs has skyrocketed. This has spurred the production of specialized, AI-focused GPUs that offer even greater processing power and efficiency.

The infrastructure build-out is staggering. Industry reports from 2025 indicate plans for deploying millions of these advanced GPUs within vast data center clusters. For instance, NVIDIA’s GB200 Blackwell platform is at the forefront of this wave, with significant production and shipment ramp-ups occurring throughout 2025. In April 2025 alone, manufacturers shipped approximately 1,500 GB200 NVL72 server racks, a substantial increase over the entire first quarter of the year. While some analyst forecasts have been adjusted, predicting around 25,000 to 35,000 GB200 NVL72 racks for the year, this still translates to the deployment of millions of individual GPUs: at 72 GPUs per rack, the upper end of that range corresponds to total GB200 shipments of around 2.52 million units. These racks, each densely packed with processors, form distributed supercomputing networks.
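The rack and GPU figures in this section can be cross-checked with simple arithmetic. The sketch below is only a back-of-the-envelope illustration: it assumes 72 GPUs per rack (the "72" in NVL72), the roughly $3 million per-rack price quoted in this section, and the 25,000 to 35,000 rack forecast range. The function name `fleet_estimate` is hypothetical and introduced purely for illustration.

```python
# Back-of-the-envelope check of the GB200 NVL72 figures quoted in the article.
GPUS_PER_RACK = 72                 # the "72" in NVL72: GPUs per rack
PRICE_PER_RACK_USD = 3_000_000     # approximate per-rack price quoted in the article
RACK_FORECAST = (25_000, 35_000)   # analyst forecast range for 2025 (low, high)


def fleet_estimate(racks: int) -> tuple[int, int]:
    """Return (total GPUs, total rack value in USD) for a given rack count."""
    return racks * GPUS_PER_RACK, racks * PRICE_PER_RACK_USD


for racks in RACK_FORECAST:
    gpus, value = fleet_estimate(racks)
    print(f"{racks:,} racks -> {gpus / 1e6:.2f} million GPUs, ${value / 1e9:.0f} billion")
```

Under these assumptions, the 2.52 million GPU estimate and the $105 billion figure both correspond to the upper end of the forecast (35,000 racks); the lower end gives 1.80 million GPUs and $75 billion.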
The sheer quantity signifies the industry’s commitment to providing the raw computational power needed to train and operate the most advanced AI systems, effectively creating “AI factories” capable of producing intelligent systems at an industrial scale. Each GB200 NVL72 rack can command a price tag of approximately $3 million, highlighting the immense capital investment fueling this sector. At the upper end of the forecast (35,000 racks), that amounts to an estimated $105 billion in potential revenue for NVIDIA from this specific platform alone.

**The Role of Data Center Architecture and Networking**

Beyond individual processors, the overall architecture of the data centers themselves plays a crucial role. These facilities are being meticulously designed from the ground up to optimize for AI workloads. This includes high-density server racks that maximize computing power within a given space, advanced cooling systems that can handle extreme heat loads, and ultra-high-speed networking to ensure seamless data flow. The ability to move vast amounts of data rapidly between processors, memory, and storage is paramount. Low-latency, high-bandwidth networking is essential to prevent bottlenecks that could significantly slow down or even halt complex computational tasks. This integrated approach, encompassing hardware, architecture, and networking, ensures that the entire system operates cohesively, maximizing efficiency and performance for the intensive demands of AI model training and deployment.

A significant development in data center architecture in 2025 is the widespread adoption of advanced cooling technologies. As AI chips generate immense heat, traditional air cooling methods are proving inadequate. Liquid cooling solutions, including immersion cooling (where servers are submerged in dielectric fluids) and direct-to-chip liquid cooling, are becoming standard.
Major players like Google have deployed liquid-cooled TPU pods, quadrupling compute density, while Microsoft is committed to zero-water-waste cooling systems for new data centers, often leveraging technologies that manage thermal loads without continuous water input. This shift is not just about managing heat; it’s about enabling higher rack densities, reducing energy consumption from fans, and supporting the next generation of high-power AI processors. The global data center liquid cooling market is projected to grow at a compound annual growth rate of over 20%. Furthermore, AI itself is being employed to optimize cooling operations, allowing for real-time monitoring, predictive maintenance, and dynamic adjustments to match workload variations, ensuring efficiency and minimizing energy waste.

**Driving Forces: User Growth and Evolving AI Capabilities**

The immense expansion of computing power is not happening in a vacuum. It is directly fueled by two primary forces: the explosive growth in the user base of advanced AI tools and the rapidly evolving capabilities and applications of AI across virtually every sector.

**The Exponential User Base of Advanced AI Tools**

A primary impetus behind the massive expansion of computing power is the phenomenal growth in the adoption and usage of advanced AI tools. Services like ChatGPT, for instance, have seen an explosive increase in their user base. Projections for 2025 indicate global AI adoption will reach an impressive 378.8 million users, with an unprecedented 64.4 million new users anticipated that year alone [cite:3 (Global AI Adoption)]. This widespread engagement signifies a fundamental shift in how individuals, businesses, and developers interact with and rely on artificial intelligence.

Across the business world, AI integration is becoming the norm. As of 2025, a significant 72% of companies worldwide now use AI in at least one business function, a substantial increase from just 55% a year prior [cite:5 (State of AI)].
This surge is particularly pronounced in IT and marketing, with generative AI use also climbing rapidly, now employed by 71% of organizations in at least one department, led by C-level executives [cite:5 (State of AI)]. The accessibility and utility of these AI systems have democratized access to powerful computational capabilities, leading to a surge in demand for their services. This user-driven expansion directly translates into a need for exponentially more computing resources to support the ongoing operations, development, and wider deployment of these increasingly popular AI applications. The AI hardware market itself, a significant indicator of this demand, was valued at approximately $66.8 billion in 2025 and is projected for substantial growth, expected to reach $296.3 billion by 2034 [cite:3 (AI Hardware Market Size)].

**From Curiosity to Essential Utility: AI’s Expanding Role**

The utility of AI is rapidly evolving beyond novel applications and early experiments. Advanced AI is becoming an indispensable tool across a vast spectrum of industries and daily life. From aiding in scientific research and drug discovery to automating complex business processes, personalizing education, and enhancing creative endeavors, AI’s practical applications are multiplying. This transition from a niche technology to an essential utility amplifies the demand for reliable and powerful computing infrastructure.

Consider the impact across sectors: in healthcare, AI is transforming diagnostics and genomics; in finance, it’s revolutionizing fraud detection and algorithmic trading; in manufacturing, it’s optimizing supply chains and predictive maintenance. The development of specialized chips like Neural Processing Units (NPUs) is also expanding AI’s reach to edge devices, enabling on-device processing of complex models in smartphones and IoT devices without constant cloud reliance [cite:3 (AI Hardware Market Size)].
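To put the growth figures cited in this section on a common footing, the following sketch converts them into annual growth rates. It is a rough illustration only: it assumes the market projection spans nine years (2025 to 2034) and that the 64.4 million new users are net additions to the prior year's base. The helper name `cagr` is introduced here for illustration.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by a start value, end value, and span."""
    return (end / start) ** (1 / years) - 1


# AI hardware market: $66.8B (2025) -> $296.3B (2034), figures from the article.
market_cagr = cagr(66.8, 296.3, 2034 - 2025)

# AI users: 378.8M projected for 2025, of which 64.4M are new that year.
users_2025 = 378.8
new_users = 64.4
user_growth = new_users / (users_2025 - new_users)  # growth over the prior-year base

# Enterprise adoption: 55% -> 72% of companies, in percentage points.
adoption_gain_points = 72 - 55

print(f"Implied hardware market CAGR: {market_cagr:.1%}")
print(f"Implied year-over-year user growth: {user_growth:.1%}")
print(f"Enterprise adoption gain: {adoption_gain_points} percentage points")
```

Under these assumptions, the hardware market projection works out to roughly 18% compound annual growth and the user base to about 20% year-over-year growth, which puts both in the same range as the 20%-plus rate cited for the liquid cooling market.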
As AI becomes more deeply integrated into critical systems and workflows, the need for robust, scalable, and always-available computational resources intensifies. This broad adoption across diverse fields underscores the foundational importance of compute power in enabling the AI-driven economy of the future, where efficiency, personalization, and advanced problem-solving are paramount.

**The Path to Superintelligence and Beyond**

The long-term vision driving the demand for immense computational resources often extends to the pursuit of artificial general intelligence (AGI) and potentially superintelligence. These ambitious goals require AI systems that possess capabilities far exceeding human intelligence across a wide range of tasks. Achieving such advanced states of artificial intelligence necessitates training models on an unprecedented scale, involving vast datasets and immense computational cycles that dwarf current requirements.

The current infrastructure build-outs are thus not solely for present-day applications but are strategically designed to provide the foundational computing power required for future breakthroughs in AI research. This includes the potential development of systems that could fundamentally alter our understanding of intelligence and technology, pushing the boundaries of what is computationally possible. The ongoing race to develop more powerful AI models ensures that the demand for cutting-edge processors, massive GPU clusters, and advanced data center infrastructure will continue to accelerate, setting the stage for transformative advancements in the decades to come.

**Navigating the Challenges and Future Outlook**

The rapid expansion of AI hardware and infrastructure is not without its significant challenges. Addressing these issues is critical for ensuring that the AI revolution is sustainable, economically viable, and environmentally responsible.

**Addressing Environmental Considerations in Hyperscale Build-Outs**

The construction and operation of massive data centers present significant environmental challenges that require careful consideration and mitigation. The immense energy consumption associated with training and running AI models has direct implications for carbon footprints and resource utilization. While AI can be a powerful tool for combating climate change through data analysis and optimization, its own operational demands are substantial. By the end of the decade, AI could be consuming up to 4% of the world’s electricity, a figure comparable to the power usage of some small countries [cite:2 (AI and Environment)]. Each interaction with a generative AI model can release grams of carbon dioxide, and training large models can emit hundreds of tons [cite:2 (AI and Environment)].

Furthermore, these facilities require vast quantities of fresh water for cooling systems, a resource that is increasingly scarce in many regions. The electronic waste generated by the constant cycle of hardware upgrades also poses a substantial environmental concern, as discarded processors can contribute to toxic waste streams. Addressing these issues necessitates the development of energy-efficient technologies, sustainable water management practices, responsible e-waste recycling programs, and the prioritization of renewable energy sources to minimize the ecological impact of this technological expansion. Many organizations are actively working to balance AI’s benefits with its environmental costs, recognizing that responsible AI development must align with sustainability goals [cite:1, 2 (Environmental impact of AI)].

**The Economic Landscape: Investment, Speculation, and Sustainability**

The colossal investments being made in AI infrastructure, estimated to be in the hundreds of billions of dollars, have also sparked discussions about the economic sustainability and potential for financial bubbles within the AI sector.
While proponents argue that these investments are essential for technological progress and are accompanied by robust demand, skeptics raise concerns about long-term profitability and the possibility of overinvestment. Some analyses suggest that current revenue generation may not keep pace with the escalating infrastructure costs required to support AI services. Addressing these concerns requires careful financial planning, efficient operational management, and a clear path to monetizing AI capabilities in a way that justifies the massive capital expenditure. The goal is to ensure that the development of AI infrastructure is not only technologically feasible but also economically viable and sustainable in the long run, supporting the growth of the AI economy without fostering speculative excess. The AI hardware market, however, shows strong resilience, with significant growth projected, indicating a strong underlying demand that supports ongoing investment [cite:3 (AI Hardware Market Size)].

**The Future of Compute-Centric Economies**

The ongoing expansion of computing power signals a fundamental shift towards what could be termed “compute-centric economies.” In such economies, access to and mastery of computational resources become primary drivers of economic growth and competitive advantage. The ability to harness immense processing power for AI development, innovation, and deployment will likely define a nation’s or a company’s standing in the global landscape. This evolution suggests that future economic paradigms will be deeply intertwined with the advancements in AI infrastructure, highlighting the strategic importance of continued investment, innovation, and responsible development in the field of computing power. The build-out of these massive data centers is, therefore, not just about technology; it is about architecting the economic and societal structures of the future.
As AI continues to permeate every aspect of our lives and economies, the hardware that powers it will remain at the forefront of progress, shaping innovation, productivity, and our collective future.

***

**Key Takeaways and Actionable Insights:**

* Hardware is Foundational: The AI revolution is built on a physical backbone of specialized processors and massive data centers. Continuous innovation in GPUs, AI accelerators, and data center design is critical.
* User Demand Drives Scale: The exponential growth in AI users and enterprise adoption is the primary driver for the massive investments in computing power.
* Embrace Efficiency and Sustainability: The significant energy and water demands of AI hardware necessitate a focus on energy-efficient technologies, renewable energy sources, and sustainable cooling solutions.
* AI as a Solution: While AI has environmental impacts, it also holds immense potential to solve environmental challenges, from climate modeling to resource optimization. Balancing AI’s footprint with its problem-solving capabilities is key.
* Prepare for a Compute-Centric Future: Economic and societal paradigms are shifting towards computation as a core resource. Understanding and adapting to this trend will be vital for future success.

The technological backbone supporting artificial intelligence is evolving at an unprecedented pace. By understanding the hardware, the forces driving its growth, and the challenges ahead, we can better navigate the path toward a more intelligent and sustainable future.