The Breakthrough Technology: Hierarchical Reasoning Model

The philosophical divide found its physical manifestation in the Hierarchical Reasoning Model (HRM). This is where theory meets demonstrable, quantifiable superiority over the old guard.

Architectural Elegance Over Brute Force Parameter Count

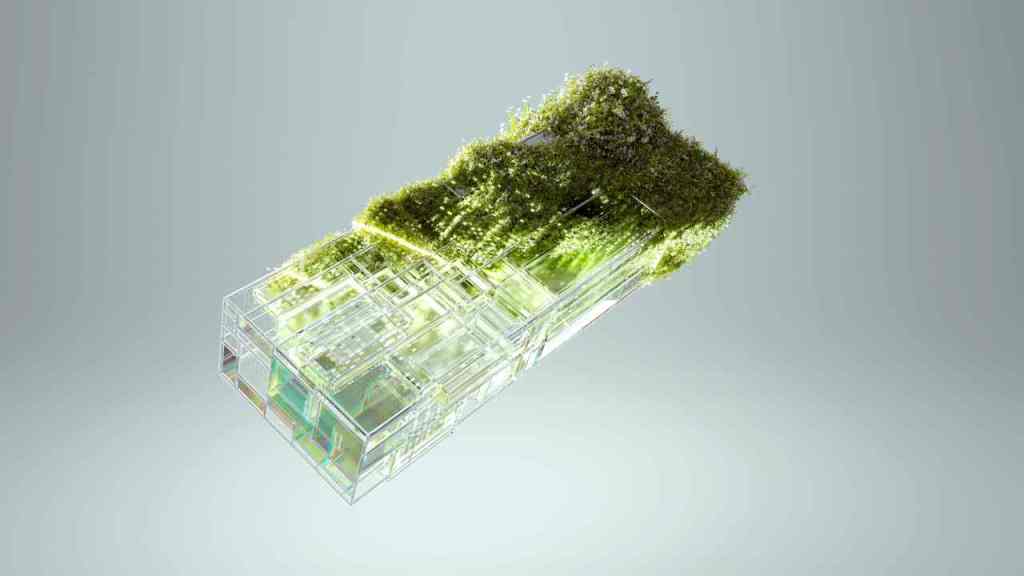

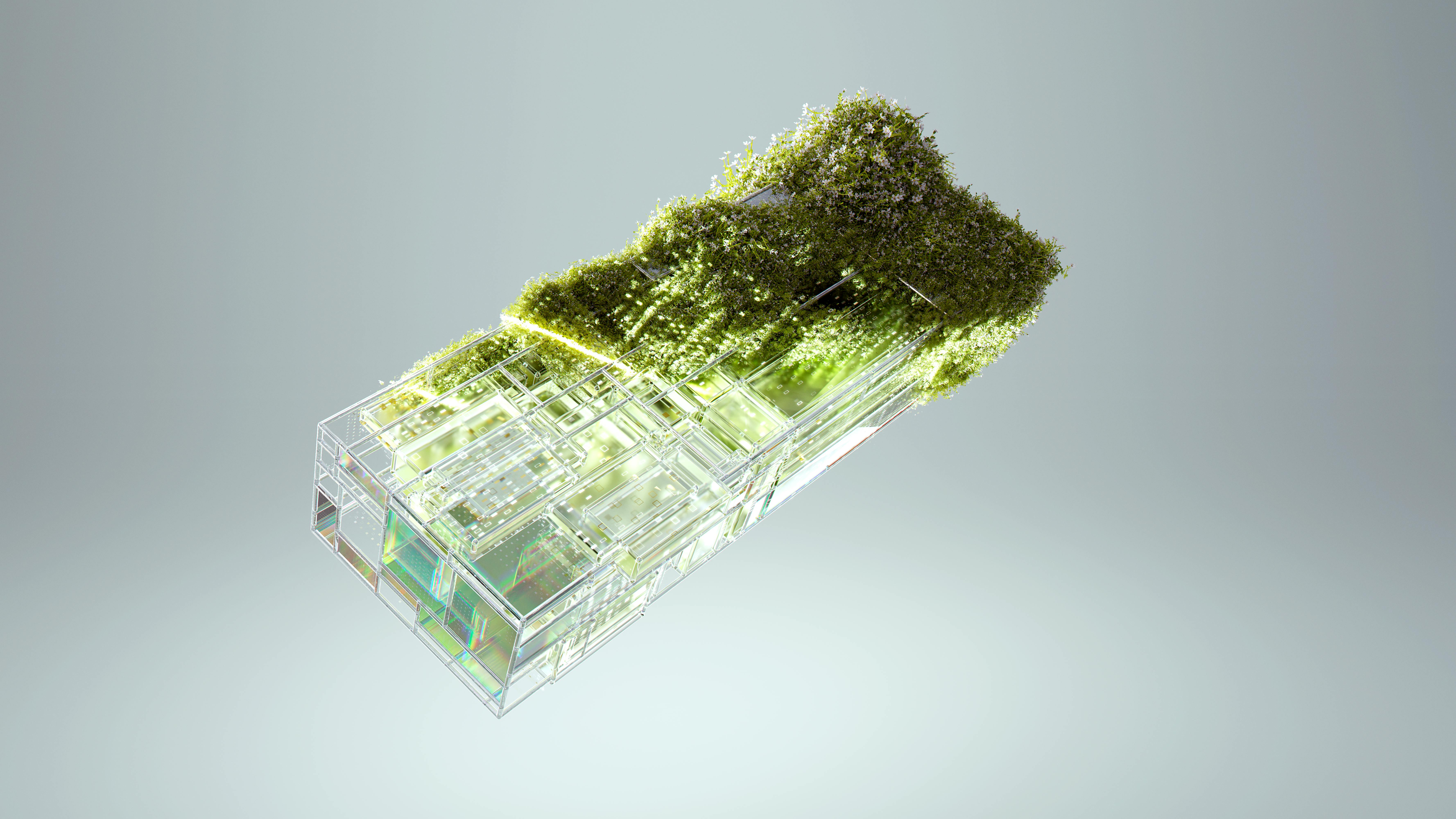

As its name suggests, the HRM is built around structured thought processes. It mirrors how biological systems often manage complex tasks through layered, interconnected modules, each responsible for a specific level of abstraction or processing. Rather than the flat, monolithic structure common in today’s largest AI systems, the HRM proposes a dynamic, organized computational flow.

The elegance of this design lies in its ability to delegate tasks and combine intermediate results in a meaningful, non-linear fashion. This is a hallmark of human thought that large, undifferentiated neural networks often struggle to replicate reliably. The deliberate engineering choice gives the HRM an inherent capacity to explore solutions systematically instead of relying on statistical memorization of similar past problems. The emphasis on hierarchy implies a system that can break a large, intractable problem into smaller, more manageable sub-problems, solve each recursively, and then integrate the findings into a coherent final answer. This structural advantage is what allowed the small prototype to tackle logic and spatial reasoning problems that require genuine step-by-step deduction. The model achieves this through two interdependent recurrent modules. A slower, high-level module (the Controller, or H-module) handles abstract planning, and a faster, low-level module (the Worker, or L-module) handles detailed computation. The L-module takes several fast steps toward a local solution, and then the H-module updates once and gives it fresh context for the next round. This nested loop prevents the premature convergence that limits conventional recurrent networks, whose hidden states tend to settle early and stop doing useful work.
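The following toy sketch in plain Python makes the two-speed loop concrete. It is not Sapient’s implementation. The function names (`hrm_forward`, `tanh_layer`), the small random untrained weights, and the cycle counts are all hypothetical, and the real modules are far richer trained networks. The sketch only illustrates the scheduling idea described above. The fast L-module takes several steps, conditioned on the slow H-module’s state and the input. The H-module then updates once per cycle using the L-module’s result.

```python
import math
import random


def random_matrix(rows, cols, rng):
    # Hypothetical untrained weights, only to make the sketch executable.
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def tanh_layer(weights, inputs):
    # One dense layer with a tanh nonlinearity: y = tanh(W @ inputs).
    return [math.tanh(sum(w * v for w, v in zip(row, inputs))) for row in weights]


def hrm_forward(x, n_cycles=4, t_steps=8, seed=0):
    """Toy two-timescale recurrence: n_cycles slow steps, t_steps fast steps each."""
    dim = len(x)
    rng = random.Random(seed)
    w_low = random_matrix(dim, 3 * dim, rng)   # L-module sees [z_low, z_high, x]
    w_high = random_matrix(dim, 2 * dim, rng)  # H-module sees [z_high, z_low]
    z_high = [0.0] * dim
    z_low = [0.0] * dim
    low_updates = high_updates = 0
    for _ in range(n_cycles):
        # Fast inner loop: the L-module works on detail under a fixed H context.
        for _ in range(t_steps):
            z_low = tanh_layer(w_low, z_low + z_high + x)
            low_updates += 1
        # Slow outer step: the H-module absorbs the L-module's result once per
        # cycle, which changes the context the L-module works under next cycle.
        z_high = tanh_layer(w_high, z_high + z_low)
        high_updates += 1
    return z_high, low_updates, high_updates


state, n_low, n_high = hrm_forward([0.1] * 8)
print(n_low, n_high)  # 32 low-level steps, 4 high-level steps
```

With 4 cycles of 8 steps, the sketch performs 32 fast updates but only 4 slow ones. In this toy, effective computational depth grows with the loop counts rather than with the number of weights.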

“The brain sustains lengthy, coherent chains of reasoning with remarkable efficiency in a latent space, without constant translation back to language.” – The Sapient Intelligence research team, summarizing the motivation behind HRM.

The Striking Metric of Efficiency: Twenty-Seven Million Parameters

Perhaps the most startling piece of data supporting the efficacy of the HRM approach is the scale at which it was demonstrated. The prototype that achieved these world-beating results had a mere twenty-seven million parameters. Context is crucial here. Contemporary cutting-edge models had parameter counts in the hundreds of billions, often approaching the trillion mark, and needed immense, dedicated clusters of high-end Graphics Processing Units (GPUs) even to run inference, let alone to train. Some estimates place the latest flagship LLMs in the multi-trillion-parameter range.

The fact that a system with less than one ten-thousandth of the parameters of some of its rivals could outperform them so comprehensively on reasoning tasks delivered a powerful shockwave. This efficiency metric shattered the assumption that computational scale was the primary, non-negotiable prerequisite for advanced performance. It suggested that the industry had perhaps been trapped in an arms race of resource expenditure and had neglected the fundamental science of efficient intelligence encoding. The low parameter count not only confirms the superiority of the architecture but also promises a future where powerful AI is far more accessible and requires less specialized, less expensive hardware. This challenges the entire investment narrative in machine learning.
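The ratio is easy to check. The sketch below uses two hypothetical rival sizes, 300 billion and 1 trillion parameters, as stand-ins for “hundreds of billions” and “approaching the trillion mark.” No specific model’s size is implied. The sketch also estimates raw weight storage, assuming 4 bytes per parameter (32-bit floats) and ignoring activations and runtime overhead.

```python
HRM_PARAMS = 27_000_000

# Hypothetical rival sizes, standing in for the ranges quoted in the text.
RIVALS = {"300B model": 300_000_000_000, "1T model": 1_000_000_000_000}


def fraction_of(rival_params, params=HRM_PARAMS):
    # HRM's parameter count as a fraction of a rival's.
    return params / rival_params


def weight_memory_mb(params, bytes_per_param=4):
    # Raw weight storage only; activations and framework overhead are ignored.
    return params * bytes_per_param / 1_000_000


for name, size in RIVALS.items():
    frac = fraction_of(size)
    print(f"{name}: HRM is 1/{1 / frac:,.0f} of its size")

print(f"HRM weights: {weight_memory_mb(HRM_PARAMS):.0f} MB")
print(f"1T-parameter weights: {weight_memory_mb(RIVALS['1T model']):,.0f} MB")
```

At 32-bit precision, the 27 million weights fit in about 108 MB, while a trillion-parameter model needs about 4 TB for its weights alone. The “one ten-thousandth” figure holds for any rival above 270 billion parameters (27 million × 10,000).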

Efficiency vs. Scale – A Quick Look:

Sapient Intelligence also claims that the HRM produces far fewer hallucinations than conventional LLMs. The company further says the model already matches state-of-the-art performance in specialized fields such as weather prediction and quantitative trading.

Empirical Validation on Critical Reasoning Benchmarks

The claims made by Sapient Intelligence were not based on subjective evaluation or niche performance metrics. They were validated by demonstrable success on standardized, rigorous abstract reasoning challenges. For the AI community, these benchmarks are the ultimate arbiters of a true cognitive leap.

Superior Performance in Abstract Problem Solving

The system was specifically pitted against benchmarks designed to test not just knowledge recall, but the capacity for novel, abstract thought—the very area where large language models have historically shown fragility. The results painted a clear picture of superiority. When confronted with tasks requiring genuine, multi-step logical inference, the Hierarchical Reasoning Model exhibited a depth of understanding that its larger contemporaries simply could not match. This abstract performance is the closest current metric we have to a litmus test for genuine machine thinking, moving beyond mere mimicry of human language patterns.

The successful navigation of these abstract frontiers validated the founders’ philosophical rejection of the scaling hypothesis, confirming that a small, well-designed system could possess greater cognitive reach than a massive, generalized one. This success firmly placed the HRM in a class of its own regarding its core intellectual capability. When you see an AI solve a problem that requires genuine insight rather than just pattern matching, you are witnessing a new breed of intelligence. The HRM, by design, is equipped for this insight.

Dominance in Structured Logic Puzzles and Navigation

The model’s triumph on structured, constraint-based problems that demand meticulous planning and adherence to rules further cemented the empirical evidence. The results specifically highlighted its ability to solve advanced Sudoku puzzles, a task that requires combinatorial logic and backtracking. They also highlighted its ability to find optimal paths through complex spatial environments, specifically 30×30 mazes. These tasks are inherently difficult for pure language models. They require maintaining state, respecting constraints, and systematically testing possibilities, which demands a level of persistent, structured planning often lacking in standard autoregressive models.
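To see why Sudoku stresses a model, consider the classic depth-first backtracking search that a conventional program uses. It places a digit that satisfies the row, column, and 3×3 box constraints, recurses, and undoes the placement when it hits a dead end. This is a textbook baseline that makes the state-tracking and undo steps explicit. It does not describe how the HRM solves the puzzles, and the helper names are illustrative.

```python
def candidates(grid, r, c):
    # Digits not yet used in the cell's row, column, or 3x3 box.
    used = set(grid[r]) | {grid[i][c] for i in range(9)}
    br, bc = 3 * (r // 3), 3 * (c // 3)
    used |= {grid[i][j] for i in range(br, br + 3) for j in range(bc, bc + 3)}
    return [d for d in range(1, 10) if d not in used]


def solve(grid):
    # Depth-first search with backtracking; fills `grid` in place (0 = empty).
    for r in range(9):
        for c in range(9):
            if grid[r][c] == 0:
                for d in candidates(grid, r, c):
                    grid[r][c] = d      # tentative placement
                    if solve(grid):
                        return True
                    grid[r][c] = 0      # dead end: undo and try the next digit
                return False            # no digit fits this cell
    return True                         # no empty cells left


def parse(rows):
    return [[0 if ch == "." else int(ch) for ch in row] for row in rows]


def is_valid_solution(grid):
    full = set(range(1, 10))
    rows_ok = all(set(row) == full for row in grid)
    cols_ok = all({grid[r][c] for r in range(9)} == full for c in range(9))
    boxes_ok = all(
        {grid[br + i][bc + j] for i in range(3) for j in range(3)} == full
        for br in (0, 3, 6) for bc in (0, 3, 6)
    )
    return rows_ok and cols_ok and boxes_ok


puzzle = parse([
    "53..7....", "6..195...", ".98....6.",
    "8...6...3", "4..8.3..1", "7...2...6",
    ".6....28.", "...419..5", "....8..79",
])
givens = [row[:] for row in puzzle]
solved = solve(puzzle)
print(solved, is_valid_solution(puzzle))
```

The maze task has a similarly classical baseline: breadth-first search finds a shortest path on a 30×30 grid. In both cases the conventional program encodes the search and its bookkeeping explicitly.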

Furthermore, the system scored 40.3% on the highly respected ARC-AGI-1 benchmark. This quantitative result served as a definitive marker of its advanced reasoning capabilities against the existing field. ARC-AGI-1 has been a major focus for many labs, but competitors were also making progress on its successor, ARC-AGI-2, which is even harder for AI reasoning systems. Success in these areas signaled that the HRM was not just a better chatterbox but a superior problem-solver in domains that demand systemic, rather than statistical, intelligence. ARC-AGI is a widely used yardstick for measuring fluid intelligence in AI, and Sapient’s performance marked a huge leap for efficient, general systems.
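What a 40.3% score means depends on the scoring rule. ARC tasks present small grids of integers from 0 to 9, and a prediction counts only if the output grid matches the target exactly, cell for cell. The sketch below assumes the common ARC Prize convention of two attempts per test output and treats each test output as one scored item. Whether the HRM result used exactly this protocol is an assumption here, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Grid = List[List[int]]  # ARC grids: rows of integers 0-9


def output_solved(attempts: Sequence[Grid], target: Grid, max_attempts: int = 2) -> bool:
    # Exact match only: every cell and the grid shape must agree.
    return any(attempt == target for attempt in attempts[:max_attempts])


def benchmark_score(items: Sequence[Tuple[Sequence[Grid], Grid]], max_attempts: int = 2) -> float:
    # Percentage of test outputs solved within the attempt budget.
    solved = sum(output_solved(a, t, max_attempts) for a, t in items)
    return 100.0 * solved / len(items)


items = [
    ([[[1, 0], [0, 1]]], [[1, 0], [0, 1]]),  # right on the first attempt
    ([[[0]], [[1]]], [[1]]),                # right on the second attempt
    ([[[2]], [[3]], [[4]]], [[4]]),         # right only on a third try: not counted
]
print(f"{benchmark_score(items):.1f}%")
```

Because credit is all-or-nothing per grid, a nearly correct answer earns nothing.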

Head-to-Head Performance Analysis Against Industry Giants

Talk is cheap in Silicon Valley, but on the global AI leaderboards, the numbers do not lie. The true test of the HRM was to place its 27-million-parameter prototype directly against the far larger flagship models of the established AI giants.

Outclassing Established Models in Comparative Testing

The moment of truth arrived when the HRM’s performance was directly compared with leading commercial offerings from the most significant players in the AI space. The scores achieved by the 27-million-parameter prototype were not marginal. They represented a clear and decisive win on the defined reasoning tests. For instance, the HRM scored 40.3% on ARC-AGI-1, compared with 34.5% for OpenAI’s o3-mini-high (the o3-mini model run at its high reasoning-effort setting).

This gap of 5.8 percentage points may seem small in absolute terms, but it signifies a substantial difference in the underlying quality of reasoning and problem-solving fidelity. It suggests that Sapient Intelligence’s architectural shift delivered a qualitative leap that further scaling of OpenAI’s existing approach had not matched. The performance delta points to a fundamentally different level of cognitive capability engineered into the new system, and it sets a new, higher watermark for what is achievable in the current technological era. For broader context, by late 2025 a top Google model running in an optional “Deep Think” mode reached 45.1% on the harder ARC-AGI-2. That figure is not directly comparable to the HRM’s ARC-AGI-1 score. Still, it places the HRM’s result within a fiercely competitive frontier that the HRM reached with far less overhead.

The Specific Defeat of Contemporary Cutting-Edge Systems

The domination was not limited to just one competitor. The test results systematically placed the Hierarchical Reasoning Model above other state-of-the-art systems. Anthropic’s recent advanced iteration, Claude 3.7, managed only 21.2% in the same rigorous evaluation, a significant performance gap behind the newcomer.

More pointedly, DeepSeek’s highly competitive R1 model had generated considerable industry buzz for its capabilities and cost-effectiveness, yet it scored a mere 1.5% against the HRM’s 40.3%. The relative difference is staggering: on this structured-reasoning test, the HRM’s score is roughly 27 times higher than that of this contemporary challenger.
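A few lines of arithmetic put the head-to-head figures on one footing. The scores below are the ones reported in this section. The sketch gives both the absolute gap in percentage points and the ratio of scores, because the two measures tell different stories.

```python
HRM_SCORE = 40.3  # HRM's reported score (%)

# Rival scores (%) as quoted in this section.
RIVAL_SCORES = {"o3-mini-high": 34.5, "Claude 3.7": 21.2, "DeepSeek R1": 1.5}


def compare(hrm: float, rival: float) -> tuple:
    # Absolute gap in percentage points, and how many times higher HRM scored.
    return hrm - rival, hrm / rival


for name, score in RIVAL_SCORES.items():
    points, ratio = compare(HRM_SCORE, score)
    print(f"{name}: +{points:.1f} points, {ratio:.2f}x")
```

Against o3-mini-high, the gap is 5.8 points, which makes the HRM’s score about 17 percent higher in relative terms. Against Claude 3.7, the gap is 19.1 points, or roughly 1.9 times. Against the quoted R1 figure, the gap is 38.8 points, or roughly 27 times, which is a little over one order of magnitude.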

These direct comparisons across the leading labs—OpenAI, Anthropic, and DeepSeek—provided irrefutable, multi-source validation that Sapient Intelligence had developed an AI model that was, by the specific metrics of abstract and structured reasoning, the current global leader. This confirmed that the decision to eschew the path of large-scale parameter growth in favor of architectural innovation had paid off in the most tangible way possible: demonstrably better performance. You can review the competitive environment by examining the state of contemporary cutting-edge systems.

The Long-Term Trajectory: The Pursuit of True Cognition

For Chen and Wang, the HRM’s success is less a finish line and more a confirmation of their map. Their ambition is not to build the next slightly better chatbot; it is to unlock the next level of machine intelligence.

Defining the Path to Artificial General Intelligence

For the founders, the successful demonstration of the Hierarchical Reasoning Model is not an endpoint, but a crucial validation of their chosen methodology on the path toward true Artificial General Intelligence. Their ultimate thesis is that AGI will not spontaneously arise from the ever-increasing size of current statistical models; rather, it will emerge from the engineering of superior, brain-inspired computational structures that inherently support generalized thinking, self-correction, and complex planning. They view their current work as having successfully cracked a fundamental problem in cognitive architecture, which now serves as the robust foundation upon which the next layers of complexity can be built without hitting the same structural limitations that constrain their rivals.

The pursuit is therefore scientific and existential: they feel a duty to push this more promising technology to fruition. They recognize that the development of AGI is an inevitable, world-shaping event, and they believe that their specific, efficient, and reason-oriented architecture is the most responsible and promising avenue to guide that development toward a beneficial outcome. This responsibility informs their entire corporate ethos. For more on how to view this progression, check out the latest discussions on the future of foundational AI research and development.

The Projected Timeline for Human-Level Cognitive Function

Driven by the profound success of their small prototype, the confidence within Sapient Intelligence regarding the timeline for achieving AGI has become notably more aggressive. The founders publicly express a belief that the emergence of an artificial intelligence capable of outperforming human intellect across virtually all economically valuable tasks is not a distant dream spanning decades, but an event potentially visible within the next ten years. This timeline is predicated on the assumption that their current architectural blueprint can be successfully scaled and iterated upon, carrying its foundational reasoning advantages forward.

They articulate a sense of urgency, often referencing the concept of “Pandora’s box,” implying that if they do not achieve this breakthrough responsibly, someone else surely will, perhaps without the same commitment to thoughtful, principled development. This ambition underscores the high-stakes nature of their work and explains their initial decision to reject outside influence: they see themselves as being at the vanguard of a crucial technological moment, one that requires absolute fidelity to their core scientific vision. This is not just about being first; it is about setting the right course for the destination.

Actionable Advice for Founders on Vision Fidelity:

Broader Implications for the Technology Sector

The success of Sapient Intelligence isn’t just a good story; it’s a tectonic shift that revalues the core assets of the entire machine learning industry.

Redefining Investment Paradigms in Machine Learning

The performance differential demonstrated by the HRM (superior results from a model orders of magnitude smaller) carries massive implications for the venture capital and corporate investment strategies that have fueled the AI boom. The established norm has been to fund companies based on the magnitude of their data moats and their GPU cluster capacity, effectively treating AI development as a battle of resource attrition. This has created a moat based purely on expense.

The success of Sapient Intelligence introduces a powerful counter-narrative: that architectural ingenuity can triumph over brute-force spending. This finding will inevitably force a major recalibration of due diligence for investors. Future funding rounds will likely scrutinize the underlying mathematical and architectural novelty of a company’s core model far more closely than the sheer number of GPUs they can afford to purchase. This shift could democratize AI innovation, lowering the barrier to entry by prioritizing clever engineering solutions over simply having the deepest pockets, potentially unleashing a wave of innovation from smaller, academically rigorous teams that previously felt locked out of the top tier.

Consider the accessibility. A model that performs at the cutting edge without a massive, frontier-scale training run suggests that the next wave of breakthroughs might come from smaller, more focused labs, not only from the established hyperscalers. This is a strong signal to the VC world to look beyond the “bigger is better” mantra and dig into the core science.

The Future of Foundational AI Research and Development

The ultimate impact of this story will be felt most keenly in the research departments of every major technology firm. The widespread belief that the transformer model, scaled to the maximum, represented the final viable path to advanced AI is now severely undermined. This necessitates a mass re-evaluation of research priorities globally. Labs that were solely focused on scaling will now be forced to divert significant resources into exploring alternative computational substrates, including bio-inspired designs, causal modeling, and explicit reasoning engines, mirroring the work done at Sapient Intelligence.

This story serves as a potent, public, and performance-based proof point that the intellectual frontier lies in architecture, not just size. It encourages a return to foundational computer science and cognitive theory, signaling a potential end to the era of purely empirical, scale-driven AI development and ushering in a new age of scientifically grounded, architecturally intentional machine intelligence design. The entire research community is now watching to see how quickly the incumbents can pivot to incorporate the lessons learned from the quiet refusal of a multimillion-dollar check. For a look at how other leading models are adapting to the complexity, review the advancements in agentic computer use, which often require similar reasoning depth.

Conclusion: The New Math of Intelligence

The tale of William Chen, Guan Wang, and Sapient Intelligence is more than just a great founder story; it is a hard data point that challenges the core operating assumption of the past decade in artificial intelligence. They proved that intelligence is not purely a function of parameter count, but a product of thoughtful, hierarchical design. They turned down a king’s ransom to pursue a better, more efficient blueprint for thought itself.

The HRM, with its 27 million parameters, besting models hundreds of times its size on hard reasoning tasks like the ARC-AGI-1 benchmark (40.3% vs. 34.5% for o3-mini-high), is the proof. They have thrown down the gauntlet: The race for AGI will be won by the most elegant architecture, not the deepest pockets.

Key Takeaways & Your Next Steps:

What do you think? Has the era of “Bigger is Better” officially ended, or is the HRM just an outlier? Drop your thoughts in the comments below. The next phase of the AI revolution is being architected right now—are you watching the builders or just the budgets?

To stay ahead of this architectural shift, make sure you keep up with the latest in LLM expansion and its critiques, as this race is far from over.